Artificial intelligence in foreign language learning

How AI software is making its way into language learning

AI technology is now omnipresent and it’s hard to imagine teaching a foreign language these days without it. Here’s a look at the emerging field of natural language processing and potential uses (and abuses) of AI tools in language courses.

By Tobias Brockhorst

Technologies based on artificial intelligence (AI) now appear in many areas of everyday life, from personalized Internet search results and social media ads to digital voice assistants, smart home devices and facial recognition for unlocking smartphones. For some time now, they’ve been catching on in language learning, too. In what follows, we’ll zero in on two subfields of natural language processing: machine translation and automatic text generation.

Human and machine translations are virtually indistinguishable

The use of translation software is now firmly established in language learning. When learners have a hard time understanding something, they often intuitively reach for their smartphones to look up the meaning of unfamiliar words and phrases. Unlike bulky printed dictionaries, a smartphone is usually at hand all the time, and much faster when it comes to looking up words. Many use translation software to produce foreign-language versions of texts they’ve written in their mother tongue.

Until just a few years ago, machine translations were still readily recognizable as such. But research in translation technology has made enormous progress over the past decade, so nowadays it’s close to impossible to tell whether a translation was produced by a human being or a computer. Naturally, teachers often notice this when, say, learners with mediocre writing skills in the target language repeatedly submit written homework without a single grammar or spelling mistake.

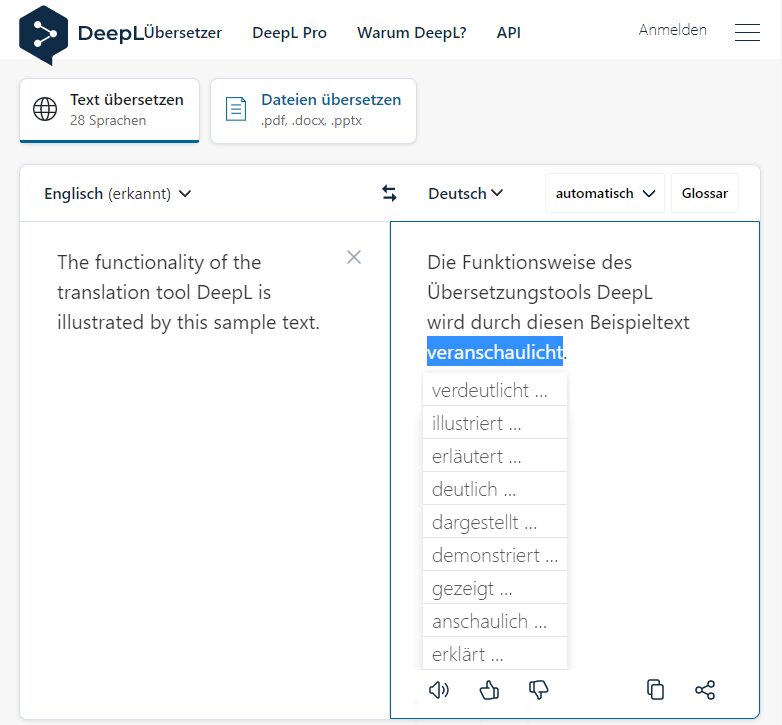

One example of the spectacular recent progress in AI translation is DeepL, a machine translation service launched by a Cologne-based company in 2017 and now used by over a million people every day. DeepL draws on statistical data to determine the probability of certain words occurring in certain contexts. Taking several billion parameters into consideration, DeepL currently offers translations in 28 languages.

The company regularly reassesses the quality of its translations. In 2017, for example, DeepL conducted a blind test in which a group of professional translators were asked to compare DeepL’s translations of 100 sentences with those of three rival machine translation services provided by leading tech companies. The average score for DeepL translations was triple the score for those by a well-known search engine’s machine translation service: the human translators felt they sounded much more natural.

Sample translation by DeepL Translate

| © DeepL

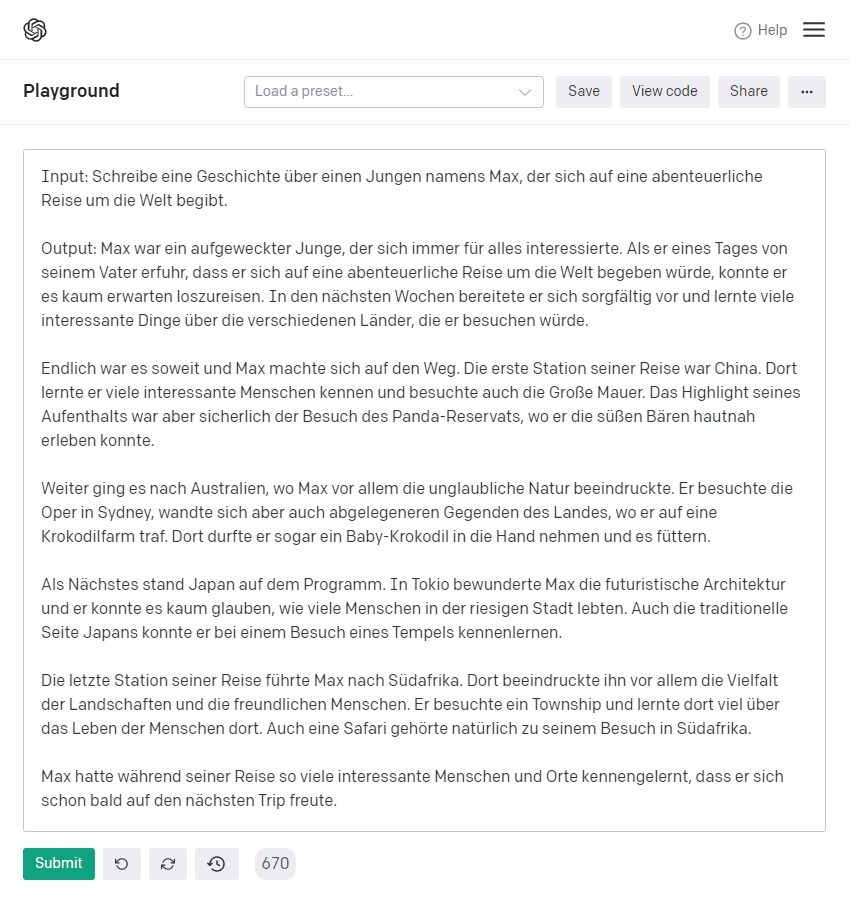

Using text generation for homework

Besides machine translation, another subfield of natural language processing, machine text generation, has made tremendous strides in recent years. One provider that has repeatedly made headlines is OpenAI. Founded in 2015, this San Francisco-based organization does AI research and, among other things, develops algorithms that can compose and complete texts.

Generative Pre-trained Transformer 3 (GPT-3), the latest such algorithm, was first described in a scientific paper in 2020. This language model is currently the largest of its kind, trained on 45 terabytes (TB) of text data running through a neural network with 175 billion parameters. The software differs from conventional text generators in two respects: for one thing, it doesn’t use pre-existing blocks of text; for another, the algorithm is not specialized for use in any one particular field – it generates pertinent content about almost any topic. Like DeepL, the GPT-3 neural network is based on a statistical model that describes the probability of words and sentences occurring in a given context.

If you input a command like “Write a story about a boy named Max who sets off on an adventurous trip around the world”, the GPT-3 algorithm will immediately start producing a story along exactly those lines – in any of several different languages. And yet the algorithm doesn’t know anything about the world; it’s just very good at predicting what the next word or sentence might be. This is how text generators are gradually creeping into language learning, since it’s obviously much easier for learners to have an automated text generator write an essay or dialogue about a given topic for them than to write it themselves.

Sample story by OpenAI GPT-3 Playground

| © OpenAI
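The idea of "predicting the next word from statistics" can be illustrated with a deliberately tiny example. The sketch below is not how GPT-3 or DeepL is implemented (both use large neural networks); it is a simple word-pair counter, and the function names and the three-sentence training text are invented for illustration only.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def next_word_probabilities(model, word):
    """Turn the raw follower counts for `word` into probabilities."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}
    return {follower: count / total for follower, count in counts.items()}


def predict_next_word(model, word):
    """Return the most probable follower of `word`, or None if unseen."""
    probabilities = next_word_probabilities(model, word)
    if not probabilities:
        return None
    return max(probabilities, key=probabilities.get)


# Hypothetical miniature "training data"; real models see billions of words.
corpus = "max packs his bag . max leaves his house . max packs his map ."
model = train_bigram_model(corpus)

print(next_word_probabilities(model, "max"))
print(predict_next_word(model, "max"))
```

The model has no notion of who Max is or what a bag is. It only records that "packs" followed "max" more often than "leaves" did, which is the sense in which such systems predict text without knowing anything about the world.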

The more useful the tool, the greater its potential for abuse

Text generators can be used not only to “cheat” on writing assignments in a language course, but also for more dubious, even criminal, purposes. They make it easy to generate unique spam and “phishing” messages as well as various other kinds of Internet fraud, including “sweepstakes scams” and “romance scams”. Fake news and even academic papers can be easily forged too – potentially contributing to the spread of misinformation and disinformation.

Another risk involved in using AI-based translation and text generation tools is their tendency to spit out problematic content and foul language. If vulgarities or racist, sexist or religious stereotypes occur in the software's training data, there’s always a risk of their being used or reproduced in machine-generated output.

The more useful the AI tool, the greater its potential for misuse and abuse. So end users need to be mindful of their responsibility to use the software and check the output carefully.

Sensible use in teaching

How can teachers use AI tools constructively and raise awareness of the need for responsible use of technology? One way is to actually work on machine-translated and machine-generated texts in class in order to heighten metalinguistic awareness among learners and show them the software’s limitations.

Even when translating from widely used languages like English and Spanish, the programs have a hard time with grammatical gender and omitted personal pronouns, so there’s plenty of room for error. Machines also often have trouble translating metaphors and allegories whose wording is worlds apart from their actual meaning. And they don’t always strike the right note in terms of context, addressee and linguistic register. Having learners fix semantic, grammatical and stylistic errors and irregularities in a machine-translated text can heighten this awareness.

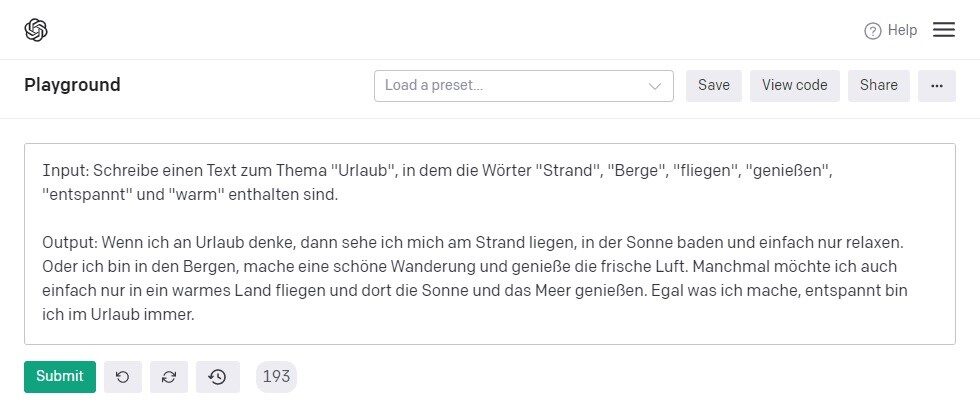

This is also a good way to work AI text generators into language teaching. Once again, text generators don’t know anything about the world; they merely generate word sequences based on statistical data, so the output doesn’t always come up to expectations. For example, although text generators can readily spit out a fantasy story with a specified plot, they have a much harder time writing non-fiction about specialized technical subject matter. They’re also prone to the same stylistic errors as machine translators. So one way to work with machine-generated texts in the classroom is to have learners revise them in various ways – whether to adjust them to a certain register or reader, or to meet certain requirements as to content in non-fiction writing.

You can also use text generators to prepare for class. To introduce new vocabulary, for example, you can give the algorithm a certain topic or theme and some words to be included in the output, and the algorithm will come up with a text showing how to use the new words in a sentence.

Sample text generated by OpenAI GPT-3 Playground to introduce new German vocabulary

| © OpenAI
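For teachers who prepare such prompts regularly, the instruction given to the text generator can be assembled from a topic and a word list. The following is only a sketch under stated assumptions: the function name, its parameters and the wording of the prompt are hypothetical, and the resulting text would still have to be pasted into whichever text generator is being used.

```python
def build_vocabulary_prompt(topic, words, language="German", sentences=5):
    """Assemble a plain-text instruction asking for a short text on `topic`
    that uses every word in `words`. The wording is illustrative only."""
    if not words:
        raise ValueError("Provide at least one vocabulary word.")
    word_list = ", ".join(words)
    return (
        f"Write a short text in {language} about the topic '{topic}'. "
        f"Use about {sentences} simple sentences and include each of "
        f"these words at least once: {word_list}."
    )


# Hypothetical example: introducing three new German words.
prompt = build_vocabulary_prompt(
    "a trip to the market", ["der Apfel", "kaufen", "teuer"]
)
print(prompt)
```

As with any machine-generated text, the output should be checked before it reaches learners, since the generator may ignore a word or use it incorrectly.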

To sum up, AI technologies have great benefits to offer – and plenty of potential for misuse as well. But teachers can develop strategies to make sure they’re used responsibly and constructively.
