Recommendations by a quantum physicist

What is Quantum Computing?

Quantum physicist Anil Murani selects five insightful articles and videos that will introduce you to the world of quantum computing.

A short guide to quantum technologies

Quantum computing is a very exciting and active field in physics. It has gained a significant amount of public attention in recent years thanks to the involvement of companies and startups, which promise to build a computer capable of outperforming even the most powerful computers on the planet with a fairly low number of quantum bits (a.k.a. qubits). But what exactly is quantum computing? What are its promises and applications? How close are we to building such a computer? In this post, I will walk you through some introductory content to help you answer these questions and understand a bit more about the concepts and stakes of quantum computing. I have chosen resources that are accessible yet serious, available online, and as current as possible.

The map of quantum computing - Dominic Walliman, DoS (30 min)

When starting to learn something new, one is often overwhelmed by the task: where should I start? What should I know? What is important? It is often useful to break the subject down into many interdependent “bricks” of knowledge that are easier to digest individually. This thirty-minute video provides a basic introduction to the concepts, motivations and state of the art of quantum computing. It uses mind mapping, a powerful graphical technique that represents the connections between the different bricks of knowledge required to understand a topic. The video walks you through a concise map, backed by simple explanations of concepts such as quantum entanglement or quantum circuit gates, without being overly simplistic. The author of the video, Dominic Walliman, is a physicist who used to work in the field. He has also produced other similarly good content popularizing physics topics such as black holes, superconductivity and Feynman diagrams.

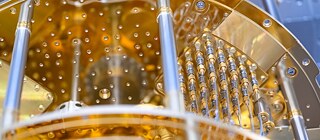

Hello quantum world! Google publishes landmark quantum supremacy claim, Elizabeth Gibney

In 2019, the Google team led by John Martinis announced that they had demonstrated “quantum supremacy” on Sycamore, their 53-qubit superconducting chip, a milestone that was widely publicized beyond the scientific community. The term “quantum supremacy” means that their processor performed a task in a short amount of time (a little more than 3 minutes) that, by their estimate, the most powerful classical supercomputer would need some 10,000 years to complete.

One criticism is that the task Sycamore performed (sampling the output of a random quantum circuit) does not currently offer any advantage for practical computations. Moreover, it was later shown that the classical resources needed to reproduce the result were far smaller than initially estimated. Nevertheless, it marked a turning point for the community and showed that nothing fundamentally bad happens to the error rates when scaling from 1 to 53 qubits, although much more progress will be required before actual useful quantum computations can be performed. Will Oliver, a prominent quantum physicist at the Massachusetts Institute of Technology, compared this milestone to the first flight of the Wright brothers. That flight was not faster than a horse, nor did it change the way goods were shipped. But it marked the advent of an entirely new technology, one that would only realize its full potential many years later.

This result, published in Nature the same year, was accompanied by a companion article and a video by Elizabeth Gibney aimed at a broader audience. In her article, she details the experiment and explains what it really achieves.

The Story of Shor’s Algorithm, Straight From the Source | Peter Shor, Qiskit

Perhaps the most iconic application that comes to mind when asked what a quantum computer could do is Shor’s algorithm. Take two large prime numbers and multiply them: the multiplication can be done quickly. On the other hand, starting from the product and recovering the two prime factors is a much harder task that takes a long time, all the more so as the numbers get larger. This asymmetry is in fact still the basis of the encryption (such as RSA) that protects our online banking transactions today: it relies on the practical impossibility of factoring integers when the number of digits is too large.

In 1994, Peter Shor found an algorithm based on qubit operations that solves the integer factoring problem in polynomial time, meaning that the running time grows only polynomially with the number of digits, so the problem does not become intractable as the numbers get larger. In other words, anyone possessing a sufficiently powerful quantum computer would be able to break these encryption methods in a reasonable amount of time.
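To make the structure of Shor’s algorithm a little more concrete, here is a toy sketch of its classical scaffolding, assuming the standard textbook reduction from factoring to period finding: pick a random a, find the period r of a^x mod N, then compute gcd(a^(r/2) − 1, N). The helper names are hypothetical, and the period is found here by brute force. That brute-force loop is precisely the exponentially slow step that the quantum part of Shor’s algorithm replaces, so this code illustrates the logic, not the speed-up.

```python
import math
import random

def find_period_classically(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step Shor's algorithm performs efficiently on a quantum
    computer; classically it can take up to about n iterations.
    Assumes gcd(a, n) == 1.
    """
    x, r = a % n, 1
    while x != 1:
        x = (x * a) % n
        r += 1
    return r

def factor_via_period(n: int, seed: int = 0) -> tuple[int, int]:
    """Return a non-trivial factor pair of an odd composite n that is not a prime power."""
    rng = random.Random(seed)
    while True:
        a = rng.randrange(2, n)
        g = math.gcd(a, n)
        if g > 1:
            # Lucky guess: a already shares a factor with n.
            return g, n // g
        r = find_period_classically(a, n)
        if r % 2 == 1:
            continue  # odd period: try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue  # a**(r/2) = -1 mod n only yields trivial factors
        # y*y = 1 mod n with y != +1 or -1 mod n, so gcd(y - 1, n) is a proper factor
        p = math.gcd(y - 1, n)
        return p, n // p

print(factor_via_period(15))
print(factor_via_period(21))
```

The order of the returned pair depends on the random choice of a; the factors themselves do not.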

Recently, Shor gave an interview for Qiskit, a platform hosting useful resources on quantum computing. In this video, he looks back on the history of the research that led to this major discovery. He also explains the invention of quantum error correction, that is, how errors occurring during a quantum computation can be corrected without destroying the quantum information. This video is a first-hand testimony from a great scientist and pioneer of quantum information science.

How Quantum Computers Will Correct Their Errors - Katie McCormick, Quanta Magazine

Today, classical computers use solid-state processors based on semiconductors, materials in which miniaturized transistors can be made. These processors perform computations on information encoded in bits (0 and 1) stored physically on a chip. From time to time, a bit can flip randomly from 1 to 0 or from 0 to 1 during the process, for example when a cosmic ray or background radiation hits the chip, yielding a wrong result at the end of the computation. Several strategies exist to overcome these errors using redundant encoding of information. For example, by copying a bit several times at several places in the processor, one can define a logical bit. One then regularly measures all the copies to check for an error and, if one is found, resets them all to their majority value. This effectively reduces the probability of failure compared to a single physical bit.

Obviously, quantum computers are also prone to errors, but could one still rely on redundant encoding to detect and correct them? There is a major complication to this strategy: in general, one cannot measure a quantum state without irreversibly destroying the quantum information it contains! Moreover, copying an unknown qubit state is forbidden: this is the no-cloning theorem. Besides, qubits suffer from an additional type of error beyond bit flips that must also be corrected: phase flips, which turn |0⟩+|1⟩ into |0⟩-|1⟩ and vice versa.
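The classical repetition code described above is easy to simulate. The sketch below assumes that each physical copy flips independently with probability p and that the logical bit is decoded by majority vote. With three copies, the logical bit is wrong only when at least two copies flip, which happens with probability 3p² − 2p³; this is smaller than p whenever p < 1/2. The function names are illustrative, not taken from any particular library.

```python
import random

def encode(bit: int, copies: int = 3) -> list[int]:
    """Logical bit -> several identical physical bits."""
    return [bit] * copies

def apply_noise(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]

def decode(bits: list[int]) -> int:
    """Majority vote (use an odd number of copies to avoid ties)."""
    return int(sum(bits) > len(bits) / 2)

def logical_error_rate(p: float, copies: int = 3, trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of how often the decoded bit differs from the sent bit."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        sent = rng.randint(0, 1)
        received = decode(apply_noise(encode(sent, copies), p, rng))
        failures += received != sent
    return failures / trials

p = 0.05
print("physical error rate:", p)
print("simulated logical error rate:", logical_error_rate(p))
print("exact value for 3 copies:", 3 * p**2 - 2 * p**3)
```

This strategy relies on copying the bit and reading every copy, which is exactly what the two quantum obstacles above rule out for qubits.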

This article and the accompanying video by Katie McCormick in Quanta Magazine explain the concept of quantum error correction. The idea was born in the 1990s, and Peter Shor contributed greatly to it. The article explains how a carefully designed encoding across many physical qubits can be used to build logical qubits.
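To see how an encoding can protect a qubit without copying it, here is a minimal NumPy simulation of the textbook three-qubit bit-flip code (our choice of example; the article may present other codes). The logical state α|0⟩+β|1⟩ is encoded as the entangled state α|000⟩+β|111⟩, which is not three copies of the original qubit, so the no-cloning theorem is respected. Two parity checks then reveal which qubit flipped without revealing α or β. The function names are hypothetical, and as a shortcut the parities are read directly from the simulated state vector.

```python
import numpy as np

# Single-qubit matrices
I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]])   # bit flip
Z = np.array([[1, 0], [0, -1]])  # phase flip

def on_qubit(op, k, n=3):
    """Embed a single-qubit operator acting on qubit k (0 = leftmost) in an n-qubit space."""
    out = np.array([[1.0]])
    for i in range(n):
        out = np.kron(out, op if i == k else I2)
    return out

def encode_bit_flip_code(alpha, beta):
    """alpha|0> + beta|1>  ->  alpha|000> + beta|111> (entangled, not three copies)."""
    state = np.zeros(8, dtype=complex)
    state[0b000] = alpha
    state[0b111] = beta
    return state

# Parity checks: compare qubits 0,1 and qubits 1,2 without reading their values
Z0Z1 = on_qubit(Z, 0) @ on_qubit(Z, 1)
Z1Z2 = on_qubit(Z, 1) @ on_qubit(Z, 2)

def syndrome(state):
    """Return the two parities (+1 or -1) of a normalized code state with at most one bit flip.

    Shortcut: the values are read straight from the simulated state vector.
    On hardware they would be measured via extra (ancilla) qubits.
    """
    s1 = np.vdot(state, Z0Z1 @ state).real
    s2 = np.vdot(state, Z1Z2 @ state).real
    return int(round(s1)), int(round(s2))

# Syndrome -> which qubit to flip back (None: no error detected)
CORRECTION = {(1, 1): None, (-1, 1): 0, (-1, -1): 1, (1, -1): 2}

def correct(state):
    k = CORRECTION[syndrome(state)]
    return state if k is None else on_qubit(X, k) @ state

psi = encode_bit_flip_code(0.6, 0.8)
noisy = on_qubit(X, 1) @ psi             # a bit flip hits the middle qubit
print(syndrome(noisy))                   # (-1, -1): both parities disagree
print(np.allclose(correct(noisy), psi))  # True
```

This code only handles bit flips. Phase flips can be handled by the same construction in a rotated (Hadamard) basis, and Shor’s nine-qubit code combines the two to protect against both.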

Quantum computing 40 years later - John Preskill

How did the idea of quantum computing emerge? It began in the 1980s, when the famous physicist Richard Feynman tried to simulate quantum systems such as molecules on a classical computer. He quickly found that the classical resources required to simulate such a system grow exponentially with its size (for instance, the number of atoms in the molecule), which makes the computation impossible even today for molecules as simple as caffeine. Feynman then proposed another approach to the problem: if one could program an artificial quantum system so that it effectively simulates a quantum system from nature, then the quantum resources required would only scale with the size of the simulated system, rather than exponentially. The quantum computers we are building now, which use quantum mechanics as a resource to perform powerful general-purpose computations, are not fundamentally different from what Feynman envisioned forty years ago.

This review article was written by John Preskill, a prominent theoretical quantum physicist at Caltech. It is available on arXiv, a server dedicated to hosting scientific preprints that is widely used in the quantum physics community. The article explains the core concepts of quantum computing in great detail, as well as the context and stakes of the field. A word of warning: the text contains a few mathematical formulas, used to illustrate important concepts. The mathematical formalism is central to quantum mechanics, and it is hard to avoid if one wants to grasp its fundamental concepts. However, if you feel uncomfortable with them, you can skip sections 3 to 7, which are the most technical. Finally, this article is also a moving tribute to Richard Feynman, whom Preskill met personally when he began his career as a professor at Caltech.
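Feynman’s argument about exponential growth can be made concrete with a little arithmetic. A general state of n qubits (two-level quantum systems) is described classically by 2^n complex amplitudes. Assuming each amplitude is stored as a double-precision complex number (16 bytes), the memory needed by the most direct simulation method grows as shown below; at 53 qubits, the size of Sycamore, it already reaches about 144 petabytes. Cleverer classical algorithms can sometimes do much better for specific tasks, so this is the cost of the brute-force approach only. The helper names are illustrative.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store all 2**n complex amplitudes of an n-qubit state.

    Assumes one complex128 number (two 64-bit floats = 16 bytes) per amplitude.
    """
    return (2 ** n_qubits) * bytes_per_amplitude

def human_readable(num_bytes: float) -> str:
    """Format a byte count with decimal (power-of-1000) units."""
    for unit in ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB"]:
        if num_bytes < 1000:
            return f"{num_bytes:.1f} {unit}"
        num_bytes /= 1000
    return f"{num_bytes:.1f} YB"

for n in (10, 20, 30, 40, 53, 100):
    print(f"{n:>3} qubits -> {human_readable(state_vector_bytes(n))}")
```

Each additional qubit doubles the memory, which is why adding a few dozen qubits takes the problem from a laptop to far beyond any existing supercomputer.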