When AI art and commerce collide

Computers can produce surprising, beautiful art – they can also, in pursuit of profit, be made to sap our attention, strip our privacy and polarize our politics. Two artists grapple with a technology built on high ideals but used to sell ads.

Ultramarine blue is a shade so striking, and so expensive, that Michelangelo once abandoned an artwork because he couldn’t afford enough of it. Johannes Vermeer threw his family into debt so he could, and then used it to paint the headscarf in his most famous work, Girl with a Pearl Earring. An Italian painter from the Middle Ages once wrote: “Ultramarine blue is a glorious, lovely and absolutely perfect pigment beyond all the pigments. It would not be possible to say anything about or do anything to it which would not make it more so.”

In 2014 the mines were seized by a warlord, the latest in a long history of violent takeovers. The money generated since has been used to corrupt politicians and fund the Taliban. None of this changes the colour and beauty of ultramarine, but it does – it must – change something.

Sassoferrato’s "The Virgin in Prayer" and Johannes Vermeer’s "Girl with a Pearl Earring" both feature ultramarine blue

| Photo credits: Niday Picture Library & Alamy Stock Photo / Ian Dagnall & Alamy Stock Photo

Sassoferrato’s "The Virgin in Prayer" and Johannes Vermeer’s "Girl with a Pearl Earring" both feature ultramarine blue

| Photo credits: Niday Picture Library & Alamy Stock Photo / Ian Dagnall & Alamy Stock Photo

Computation isn’t often associated with beauty, but perhaps it should be. One place you can start the history of computation is in 1950, with the publication of Alan Turing’s Computing Machinery and Intelligence. Turing was a visionary; his paper tried to answer the question of whether computers could think – a profound, challenging interrogation that excited both philosophers and engineers.

Then there are the computer artists, who have for years been striving to fulfil computation’s promise of beauty – using the same machines, sometimes the same algorithms, that are now facing backlash as tools of commercial surveillance, attentional exploitation, and bias. Does that change anything?

“Yeah, this is the kind of stuff that I think about,” says Memo Akten, a Turkish artist, currently based in London.

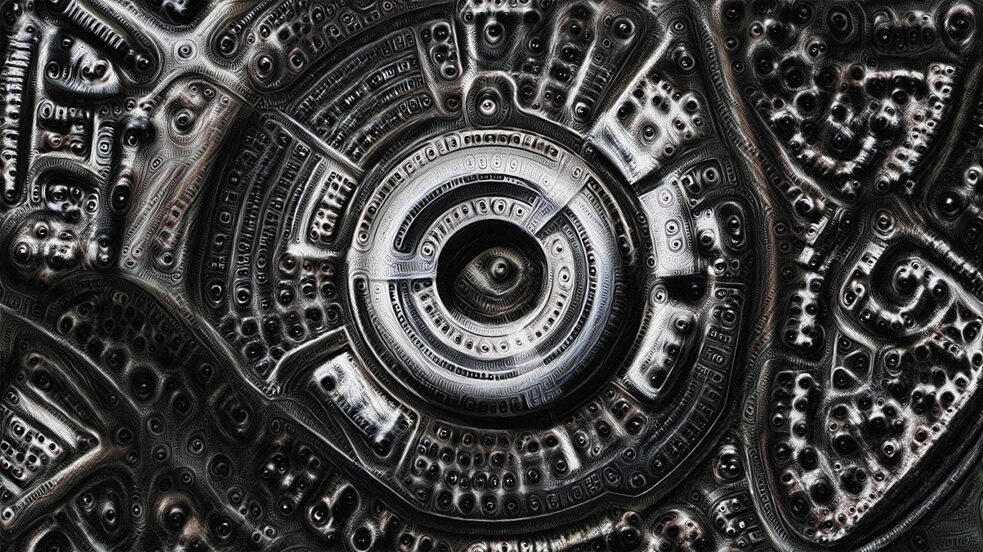

In 2015, Akten made an image using a Google-developed tool called DeepDream – he ran a satellite image of the UK’s spy headquarters through the algorithms to generate an image of an eye in the middle of a hallucinatory, bubbling grey landscape. The effect is creepy and repulsive; which is to say, it’s a good piece. Algorithms that have been around for decades are suddenly popular, Memo wrote at the time, and that progress “is undeniably fuelled by the multi-billion dollar investments from the purveyors of mass surveillance.”

In the artwork the medium was twisted back on itself; the snake nibbling its own tail. A year later, when Akten was granted a residency with Google’s newly formed Artists + Machine Intelligence program, he used the time to build a Twitter bot which illustrated the gender biases language algorithms learn from our everyday speech.

Art is one of the ways norms can be shifted, Akten says. “A few years ago, maybe this issue of bias wasn't discussed, or even if it was just like, ‘Yeah yeah, that's just the way it is.’ But now it's kind of becoming unacceptable, it's become accepted that it's unacceptable. So this is what I think art can do.”

Memo Akten's "All watched over by machines of loving grace"

| © Memo Akten

Memo Akten's "All watched over by machines of loving grace"

| © Memo Akten

Akten, however, also brought up another way art intersects with tech companies; publicity. Programs like Google’s Artists + Machine Intelligence (AMI) could possibly give support to artists “in a way that is controlling the message that is going out to the mainstream,” he says, carefully. It would be a problem, he admits, if Google decided to fund and promote only artists whose work was inoffensive and non-critical.

On a recent Zoom panel hosted by Goethe-Institut, Kristy H.A. Kang, an assistant professor in the School of Art at Nanyang Technological University, said she understood why modern artists are working with tech giants like Google.

“I don’t really hold the opinion that artists should not be working with big tech. I think it really depends.” Kang pointed out that many industries sponsor art, and that it’s possible these engagements can lead to a fruitful convergence between different perspectives. “The only thing you really need to be aware of is, what is the intent of this?” Kang says. “Is this going to be as a way of exploiting the artist’s labour, or whoever, or is this really on equal footing?”

Mike Tyka is an academic and artist and was one of the founders of the AMI project. Speaking from the US west coast, he agreed that if Google was using its money to control the narrative around AI technology, it would be concerning. But, he said, Google simply doesn’t have that kind of dominance over the AI art world. (Akten made a similar point, and noted that he did not encounter any censorship while working on his AMI project.)

And while Tyka has no problems with people making art for purely aesthetic reasons, he says, “if your piece ends up glorifying a technology that has a huge dark side, and you don't acknowledge that dark side, it's reasonable to criticise the artwork as naive or ignorant at best and negligent at worst.”

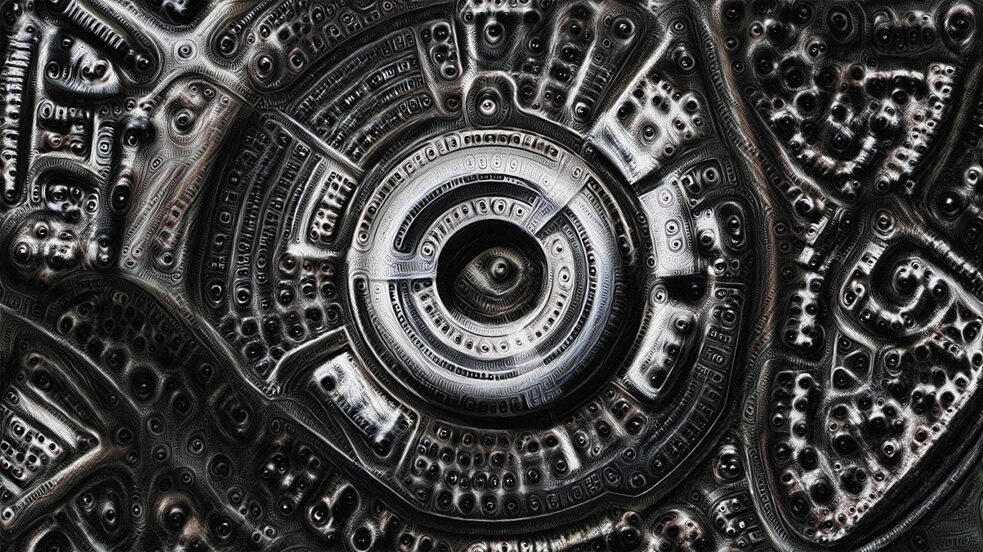

"Us and them" from Mike Tyka features thousands of tweets from Russian bots

| © Mike Tyka

"Us and them" from Mike Tyka features thousands of tweets from Russian bots

| © Mike Tyka

“It's very easy to make these technologies look awesome,” Akten says, “because they are awesome. It's also very easy to make these technologies look terrifying, because they are terrifying as well. And so it is a complicated discussion to be had about what this technology is and where it's going.”

Art can handle complexity. After Brexit and the Trump election, Tyka trained a neural network on hundreds of thousands of tweets from Russian bots, and combined it with another network that generated realistic images of non-existent people. He rigged twenty printers to a gallery ceiling in a circle, and had them print twenty long streams of fabricated tweets from fabricated people, creating what can only be described as a descending hell-circle of apocryphal nonsense and propaganda.

Then, underneath the printers, inside the circle of fallen information, Tyka placed two chairs. “It’s an invitation to sit down and have a real conversation, face to face, with another human,” he says.

Let me try, though. ‘Ultramarine’ comes from Latin, meaning ‘beyond the sea’: before a synthetic version was invented in the 1800s, ultramarine pigment was made by grinding lapis lazuli gems, sourced from one inhospitable valley in Afghanistan. “If you do not wish to die,” a representative of the East India Company once wrote, “avoid the Valley of Kokcha.”

In 2014 the mines were seized by a warlord, the latest in a long history of violent takeovers. The money generated since has been used to corrupt politicians and fund the Taliban. None of this changes the colour and beauty of ultramarine, but it does – it must – change something.

Sassoferrato’s "The Virgin in Prayer" and Johannes Vermeer’s "Girl with a Pearl Earring" both feature ultramarine blue

| Photo credits: Niday Picture Library & Alamy Stock Photo / Ian Dagnall & Alamy Stock Photo

Binary beauty

Computation isn’t often associated with beauty, but perhaps it should be. One place you can start the history of computation is in 1950, with the publication of Alan Turing’s Computing Machinery and Intelligence. Turing was a visionary; his paper tried to answer the question of whether computers could think – a profound, challenging interrogation that excited both philosophers and engineers.

But what began as an attempt to explore the mind has been transformed into an engine of commerce. Among its other uses (in medicine, academic research, language), so-called artificial intelligence has been put to work to entice users to watch more YouTube, or to influence the behaviour of Facebook users – both for the purpose of selling microtargeted ads. “The best minds of my generation are thinking about how to make people click ads,” a former Facebook engineer said in 2011. “That sucks.”

Then there are the computer artists, who have for years been striving to fulfil computation’s promise of beauty – using the same machines, sometimes the same algorithms, that are now facing backlash as tools of commercial surveillance, attentional exploitation, and bias. Does that change anything?

Critiquing the machine from the inside

“Yeah, this is the kind of stuff that I think about,” says Memo Akten, a Turkish artist, currently based in London.

In 2015, Akten made an image using a Google-developed tool called DeepDream – he ran a satellite image of the UK’s spy headquarters through the algorithms to generate an image of an eye in the middle of a hallucinatory, bubbling grey landscape. The effect is creepy and repulsive, which is to say, it’s a good piece. Algorithms that have been around for decades are suddenly popular, Akten wrote at the time, and that progress “is undeniably fuelled by the multi-billion dollar investments from the purveyors of mass surveillance.”

In the artwork the medium was twisted back on itself: the snake nibbling its own tail. A year later, when Akten was granted a residency with Google’s newly formed Artists + Machine Intelligence program, he used the time to build a Twitter bot that illustrated the gender biases language algorithms learn from our everyday speech.

Art is one of the ways norms can be shifted, Akten says. “A few years ago, maybe this issue of bias wasn't discussed, or even if it was just like, ‘Yeah yeah, that's just the way it is.’ But now it's kind of becoming unacceptable, it's become accepted that it's unacceptable. So this is what I think art can do.”

Memo Akten's "All watched over by machines of loving grace"

| © Memo Akten

Which art is seen?

Akten, however, also brought up another way art intersects with tech companies: publicity. Programs like Google’s Artists + Machine Intelligence (AMI) could possibly give support to artists “in a way that is controlling the message that is going out to the mainstream,” he says, carefully. It would be a problem, he admits, if Google decided to fund and promote only artists whose work was inoffensive and non-critical.

On a recent Zoom panel hosted by the Goethe-Institut, Kristy H.A. Kang, an assistant professor in the School of Art at Nanyang Technological University, said she understood why modern artists are working with tech giants like Google.

“I don’t really hold the opinion that artists should not be working with big tech. I think it really depends.” Kang pointed out that many industries sponsor art, and that it’s possible these engagements can lead to a fruitful convergence between different perspectives. “The only thing you really need to be aware of is, what is the intent of this?” Kang says. “Is this going to be as a way of exploiting the artist’s labour, or whoever, or is this really on equal footing?”

Mike Tyka is an academic and artist and was one of the founders of the AMI project. Speaking from the US west coast, he agreed that if Google was using its money to control the narrative around AI technology, it would be concerning. But, he said, Google simply doesn’t have that kind of dominance over the AI art world. (Akten made a similar point, and noted that he did not encounter any censorship while working on his AMI project.)

And while Tyka has no problems with people making art for purely aesthetic reasons, he says, “if your piece ends up glorifying a technology that has a huge dark side, and you don't acknowledge that dark side, it's reasonable to criticise the artwork as naive or ignorant at best and negligent at worst.”

"Us and them" from Mike Tyka features thousands of tweets from Russian bots

| © Mike Tyka

Enter the complexity

“It's very easy to make these technologies look awesome,” Akten says, “because they are awesome. It's also very easy to make these technologies look terrifying, because they are terrifying as well. And so it is a complicated discussion to be had about what this technology is and where it's going.”

Art can handle complexity. After Brexit and the Trump election, Tyka trained a neural network on hundreds of thousands of tweets from Russian bots, and combined it with another network that generated realistic images of non-existent people. He rigged twenty printers to a gallery ceiling in a circle, and had them print twenty long streams of fabricated tweets from fabricated people, creating what can only be described as a descending hell-circle of apocryphal nonsense and propaganda.

Then, underneath the printers, inside the circle of fallen information, Tyka placed two chairs. “It’s an invitation to sit down and have a real conversation, face to face, with another human,” he says.