An interview with Tega Brain, Simon David Hirsbrunner and Sam Lavigne

Synthetic Messenger

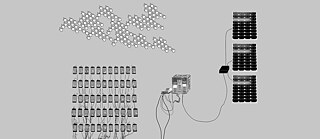

As the climate crisis continues to worsen, many scientists are searching for technologies to help us live more sustainably. But researchers and artists like Tega Brain, Simon David Hirsbrunner, and Sam Lavigne are approaching the problem from a different angle: what if the solution to climate change isn’t technological, but cultural? Their new project, Synthetic Messenger, part of the Goethe Institute’s “New Nature” series, examines how artificial intelligence can be used to shift the narrative on climate change.

By Hannah Thomasy

The researchers spoke with Massive Science about the Synthetic Messenger project and the intersection of climate change, misinformation, and the media. This conversation has been condensed and edited for clarity.

From a perspective of culture

Hannah Thomasy: Tega Brain, you have a background in environmental engineering. Tell me a little bit about how that informs your work as an artist. How do you address the tensions between technology and the environment?

Tega Brain: I trained as a water engineer and worked in that industry for a few years. A lot of the work I was doing was quite environmentally innovative, but, you know, as an engineer, you don't really get to ask questions about why certain logics are in play. I felt like I was doing a lot of work to make big housing developments and questionable developments more palatable.

And so, from that point, I've done a lot of work that questions technologies and engineering as a culture and tries to envisage other ways that we could build technologies. More recently that's been about data: the way that data-driven decision-making and data-driven systems are having an increasing impact on our lives. That's partly from an environmental perspective, through modeling and these sorts of ways of investigating and understanding the world, but more recently I've also been doing a lot of work around the internet and the way that media is being transformed by, again, these data-driven logics. I collaborate with Sam a lot, and a lot of that work takes the form of interventions that try to reveal some of this and also provide glimpses of other ways the world could be.

Simon Hirsbrunner, a lot of the work you've done relates to data transparency and trust in science — how does that play into the Synthetic Messenger project?

Truths that are hiding in plain sight

Simon David Hirsbrunner: In my media studies, I’m focusing more on the representational aspect of scientific work: how do scientists represent their work, visualize their work? I focused on climate change modeling, for example on those who are doing climate impact research. They are building huge computer models and simulations of climate change in the 21st century, so they have to imagine a whole world with climate change over the next hundred years. I found that super interesting because it's not just about temperature increase or something like that; they really have to imagine new worlds, alternative worlds. That also creates new challenges for trust, and for building trustworthy relationships with members of the public, or with different publics. I find that artistic interventions are particularly interesting and needed to do this kind of mediation work from science to society.

Sam Lavigne, your work focuses on drawing attention to political forces that shape the different technologies that we use. Can you talk a little bit more about that and how you're bringing that to the current project?

Sam Lavigne: I do a lot of work with web scraping; that's one of the main tools I use when I'm making projects. Web scraping is when you write a program to browse the internet on your behalf. So instead of going to a website, clicking on every single page in it, and copying and pasting, I can just write a program that does that for me, and that allows me to make these archives of different websites. It's a really interesting technique because it helps reveal truths that are hiding in plain sight. There's a notion that in order to understand how power works, you might have to gain access to some kind of secret knowledge. But the idea of web scraping as an artistic or critical practice suggests that you can gain a lot of knowledge of how power operates, not just knowledge in the normal sense but also a kind of poetic knowledge, based on what power itself is telling us. So that’s one component of what I do, and that's definitely something I'll bring into this project.

I'm really interested in this idea of reverse engineering specific technologies and imagining ways to repurpose them for other ends. So for example, if the police are using automated systems to predict where and when crime is going to happen in the city, and we know that those systems are fundamentally racist, that the police are fundamentally racist, can we build an alternative prediction system that either figures out where police are going to be so people can avoid those areas, or maybe looks for things that are not typically understood to be crime, like financial malfeasance. How can you take the tools that already exist and understand how they work and then alter their functionality to produce different political outcomes?
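For readers unfamiliar with the technique Lavigne describes, here is a minimal sketch of a web scraper in Python. It is not his code or the project's; it assumes a site whose pages link to one another through ordinary `<a href>` tags, and the names `crawl`, `LinkExtractor`, `fetch`, and `max_pages` are hypothetical. It starts from one page, saves its HTML, follows links that stay on the same site, and repeats, producing the kind of archive he mentions. A real scraper should also respect a site's robots.txt and rate limits, which this sketch omits.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url):
    """Download one page as text (this is the only part that touches the network)."""
    with urlopen(url) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch_page=fetch, max_pages=50):
    """Visit pages breadth-first within one site and archive their raw HTML.

    Returns a dict mapping each visited URL to its HTML, in visiting order.
    """
    archive = {}
    queue = deque([start_url])
    while queue and len(archive) < max_pages:
        url = queue.popleft()
        if url in archive:
            continue  # already saved this page
        html = fetch_page(url)
        archive[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links like "/a" or "b"
            # Stay on the same site: only follow links under the starting URL.
            if absolute.startswith(start_url) and absolute not in archive:
                queue.append(absolute)
    return archive
```

Passing a different `fetch_page` function, for example a dictionary lookup over previously saved pages, lets the crawl logic run without touching the network.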

Culture actually becomes engineerable

Much of the research that's gotten a lot of attention lately is looking at technological solutions to climate change, but your group has a really different approach: you're looking at climate change as a sociocultural problem. Can you talk about this approach and why you chose to look at it through that lens?

TB: I think that's a massive challenge. In a lot of the responses to climate change, and now increasingly in this emerging discourse around climate engineering, however speculative that may be, there’s an underlying desire for us to address the issue without actually having to change our lives at all. And I think the middle class has definitely propagated this. The issue tends to be framed in this very technical way. But if we look at where we are now, we do have to change. We do have to envisage other ways of living and imagine other definitions of what a good life is, ones that aren't based on these growth narratives that have become so naturalized. This project came about from that point of view: what if we think about this issue as a problem of media, as a problem of narrative? Can we explore and address it from that perspective, rather than the classic engineering perspective, which is, “yeah, we'll engineer the world so that we can all just continue on in exactly the same way”?

SH: Of course, you have the natural world, and you also have climate change as a cultural phenomenon, but these actually come together when everything becomes data, everything is treated as data, and culture actually becomes engineerable. So we can engineer culture, and that's one aspect of what I'm interested in getting into with Synthetic Messenger, because climate engineering is not just about manipulating natural systems; it will also be about engineering opinion, which can be done on social networks and so on. And opinion is actually already engineered: look at the ad industry and how the ad industry [prioritizes] some content over others on climate change, creating controversy and so on.

SL: I think one thing that's really important to note here, before we even answer the question, is that the media landscape is very different in America, Australia, and Germany. Although it's probably not that different in America and Australia, because the Murdoch influence is so strong in both of those countries.

Before we had this interview, we were just chatting with Simon about the different ways that climate is covered in the US and in Germany. And the difference is extremely stark. In America, you won't really see climate change mentioned, even in articles that are about climate change. So, if there's an article about the fires in California, which are completely and obviously the result of climate change, you won't necessarily see the phrase “climate change” mentioned in those articles. And I'm not talking about like Fox News, I’m talking about the New York Times.

TB: But also Fox News!

SL: Yes, especially Fox News, but also the New York Times. And of course, none of the people at the New York Times are climate change deniers; it’s not about denialism. Well, it is about denialism, but it's not just about denialism. One of the things that I'm really interested in, and I think it's a good way of thinking about problems in media in 2020, is how much of the media landscape, even the organizations you feel more or less politically or ideologically aligned with, is still driven fundamentally by market forces in what it covers and how it covers it.

You can confirm any belief you have on YouTube

And in a way they're being driven by algorithmic forces too. So there's a value to every single article that's written, and that value is determined by the number of quote-unquote engagements, which could mean people who click on it on social media. But it also means, in a very crude, boring way, how many ads were served from that particular article. Did people click on those ads, or did they not? How much money did that article make? So it's engagement, like how many people read it, but it's also how much wealth that article produced. And because of the increasing reliance on data, which pervades everything, certain topics seem to get moved to the forefront while others seem to get further buried. There's a weird way in which, in certain organizations, editorial decisions are being outsourced to automated systems and, more generally, to material realities and market forces. This is one of the things that we're going to be directly addressing in the project, and I think it's something that makes our approach perhaps a bit novel as a "geoengineering project".

TB: I think it's been well discussed that misinformation has been very strategically deployed and promoted by lobby groups in the US and Australia; I can't speak to Germany. It'd be hilarious if the situation weren't so dire, but there are all sorts of crazy conspiracy theories around wind energy in Australia, like how it can give kids learning disorders. These are stories where you hear them and think, there can’t honestly be people creating media about these things.

I think it's been well established that this has very much held up a lot of action, because public opinion has been so split and the media landscape so chaotic around this. And of course, you can confirm any belief you have on YouTube. You'll find someone who's made a video about whatever position you're gravitating towards. So obviously, with this sort of media manipulation and misinformation, the role of media has been really central in how this issue has been addressed. And it's also just such a shame that public opinion is what's driving the climate response.

SH: What I see in social media, and in recent social media discussions, is that as climate change becomes more and more real, and the information we have about it becomes much more concrete, the controversy doesn't stop, but it becomes much more related to people's lives. For example, when we have all these risk assessments about sea level rise and increased flooding in coastal areas, and we see maps, for example interactive online maps of sea level rise induced by climate change, that doesn't reduce the controversy; it just makes the controversy much more connected to people's daily lives. That's something the media has to reflect on much more: it's actually connected to very personal stories, and people have their own ways of dealing with this information. They have to find new ways of dealing with this kind of information, and it's not that easy. It's not just about believing or not believing. It doesn't save you if you believe in it, you know?

A lot of forces at play

In this project, how do you envision artificial intelligence being used to shape people's media experiences in relation to climate change?

TB: Artificial intelligence is already shaping people's media experiences. I think that's a given. I mean, we often pine for the days of just having a chronological timeline.

SL: In the US, there is a lot of concern about misinformation during the election. And one of the things that Twitter is doing is they're turning off their machine-learning based recommendation engine for what order you see the tweets in. Well, they're not turning it off all the way but they're dialing it down a little, as a weird admission on their part that the AI that they're using to order the timeline and show you different tweets has been a problem this whole time.

So the way it works now is something to the effect of this: they want you to stay on their website as long as possible, so their system is trying to predict, what tweets could we show this person so that they will remain here for as long as humanly possible?

TB: And stay in that state of anxiety that keeps them scrolling.

SL: And so, intentionally or not, it ends up promoting content that is radicalizing or filled with conspiracy theories.

TB: Or that’s just hyper-emotional, you know, people having sort of rant-y outbursts on Twitter. That content really gets a lot of engagement.
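The difference between a chronological timeline and an engagement-ranked one can be shown with a toy example. This is not how Twitter's system actually works; it assumes each post already carries a `predicted_engagement` score, standing in for the output of a machine-learning model, and the `Tweet` type and the function names are hypothetical. The only change between the two feeds is the sort key, which is why content predicted to hold attention, such as a hyper-emotional rant, can rise to the top regardless of when it was posted.

```python
from dataclasses import dataclass


@dataclass
class Tweet:
    text: str
    posted_at: int  # timestamp; larger means newer
    # Hypothetical stand-in for a model's guess at how long this post keeps someone scrolling.
    predicted_engagement: float


def chronological(timeline):
    """Newest posts first: the ordering depends only on when things were posted."""
    return sorted(timeline, key=lambda t: t.posted_at, reverse=True)


def engagement_ranked(timeline):
    """Posts predicted to hold attention longest come first, whatever their age."""
    return sorted(timeline, key=lambda t: t.predicted_engagement, reverse=True)


timeline = [
    Tweet("calm update", posted_at=3, predicted_engagement=0.2),
    Tweet("angry rant", posted_at=1, predicted_engagement=0.9),
    Tweet("news link", posted_at=2, predicted_engagement=0.5),
]
print([t.text for t in chronological(timeline)])
print([t.text for t in engagement_ranked(timeline)])
```

With these made-up scores, the oldest post, the rant, moves from last place in the chronological feed to first place in the engagement-ranked one.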

SL: These systems are already mediating so much of our social realities right now. It’s not just Twitter — it’s YouTube's recommendations…

TB: Also, what search results come back to you on Google. Every platform is using this stuff.

There's obviously been a huge amount of interest in AI in the media art space over the last few years, in a way that still surprises me because, you know, it's statistics. Sometimes I wonder why there is this fascination with it in the arts, and I think it's for many reasons. I think it does distribute agency in new ways, and paying attention to different agencies in the world obviously has a long history in artistic work. There's a question that keeps coming up: what about projects that deal with ecology and AI? If you look at the energy expenditure in training models, it’s ridiculous; there's a contradiction there in the impact of these technologies. I think the media arts can be quite guilty of desiring techno solutions or wanting to engage with the latest technologies as a way of staying relevant, but potentially also of diverting resources from the tech sector into the arts (and this is a US-based perspective). These sorts of projects happen because there isn't a lot of public art funding or independent art funding. So I think there are a lot of forces at play.

Of course, we can use AI to make our systems or the grid more efficient and whatnot, but you’re still going to have Jevons paradox at play: in a market-based system, efficiency gains mean the price of these things goes down, which tends to increase consumption, so it doesn't necessarily give you a net benefit in terms of resource use. So with the efficiency argument for AI, one has to contend with that. But I think in terms of daily experience, that's a fascinating and very rich place where we can really probe and explore how so many of our perceptions of environment, ecology, risk, and where we are with the climate issue come mediated through these data-driven and AI systems. As artists, we do have this freedom to prod at that and try to understand it through reverse engineering or intervention-type work.
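Jevons paradox can be made concrete with a short worked example. The numbers are purely illustrative assumptions, not data from the interview or the project: suppose an efficiency gain halves the energy needed per use of some service, from 1 kWh to 0.5 kWh, and the resulting drop in cost leads people to use it 2.5 times as often, from 100 uses to 250.

$$
\begin{aligned}
E_{\text{before}} &= 100 \times 1\ \text{kWh} = 100\ \text{kWh} \\
E_{\text{after}} &= 250 \times 0.5\ \text{kWh} = 125\ \text{kWh}
\end{aligned}
$$

Total use is the number of uses times the energy per use, so if efficiency divides the energy per use by a factor $k$, overall consumption falls only if demand grows by less than that same factor $k$. Here $k = 2$ but demand grew by 2.5, so total consumption rose even though each use became twice as efficient.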