Productive Errors

AI Errors in Art

In art, an error is rarely fatal. Because an error is generally more productive than counterproductive, it is often met with a certain recognition, the kind usually reserved for the admiration of a new idea or the revelation of something hitherto unthought of.

Errors belong to the category of surprise, of the unexpected. When an error happens, it is immediately identified as a result that does not meet expectations, or at least does not fall within the spectrum allocated to the expected results. It therefore presupposes a realm of predictability from which infinite, chance-based variables are banished. An error tells us at once what is wished for and what is rejected; it helps us trace the contours of what we want and identify unwanted elements. An error is a concave indicator.

When it is paired with artificial intelligence (AI), an error takes on the tinge of a relative threat. AI, essentially conceived as a set of algorithms, is increasingly used in many areas of daily life. Whether it is Alexa, which has already settled comfortably into our homes, automated personnel-management systems, or the self-driving vehicles that Uber would like to bring to market, AI has clearly established itself in our midst. And when it commits an error, the consequences can range from an almost harmless indiscretion (though this kind of thing can also take a dramatic turn) to serious discrimination in hiring processes, or even a deadly collision in which a body is interpreted as a bag. In that instance, death becomes an irreversible error.

Errors are like crabs

In art, an error is rarely fatal, although some performance works might defy this assertion. And because an error is generally more productive than counterproductive, it is often met with a certain recognition, the kind usually reserved for the admiration of a new idea or the revelation of something hitherto unthought of. Walking sideways, the crab is itself an error, and sometimes, even often, it is a precious vehicle for reflection that can evolve into a method in its own right. That being said, when art integrates AI, two types of error can occur: errors that expose the incompetence of the underlying AI, and errors that are not really errors because they are part of a learning process. We regard the latter with the indulgence an adult shows a child. The two types can be confused at any time. In fact, whatever AI does, it can only create uncertainty, at least for the moment.

Algorithmic myopia

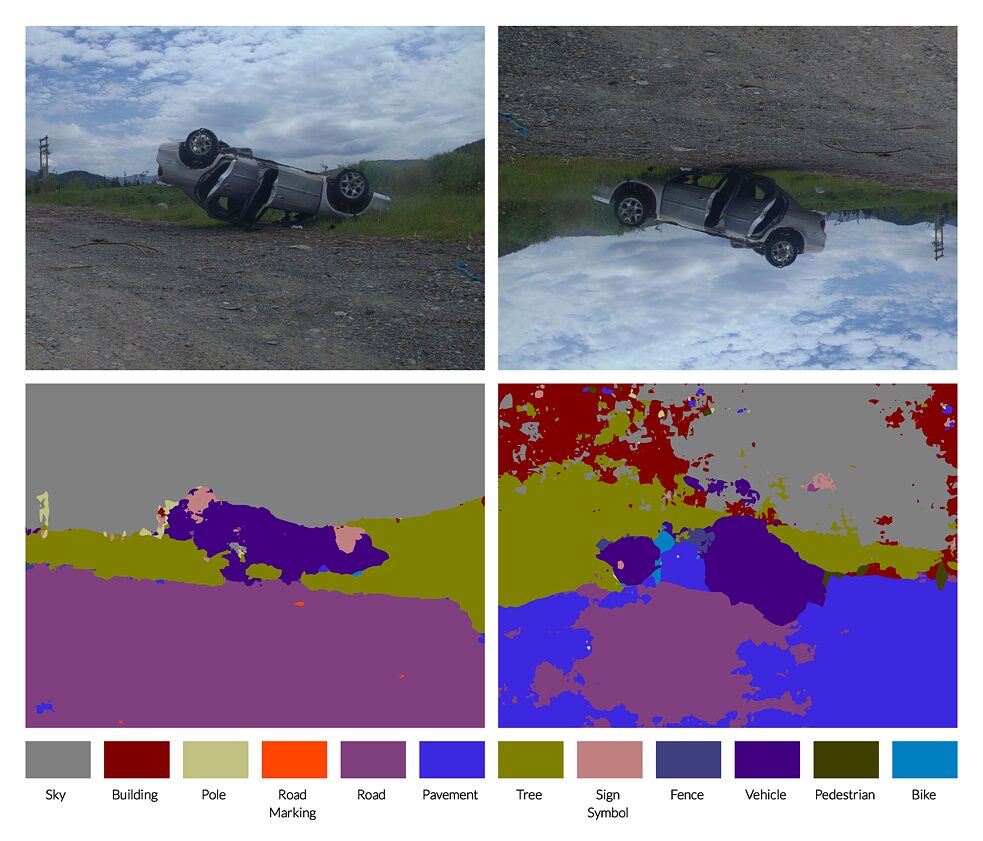

In recent years, the Montreal-based artist François Quévillon has devoted much of his work to machine automation, in particular self-driving vehicles. As we know, these vehicles have an impressive record of failures. That is the lot of any learning process, which rests on the dual principle of trial and error, and this "package" is the artist's material of choice. From the perspective of art, an error is less something "wrong" that needs correcting than something that allows us to appreciate a point of view. In art, an error is not an objective fact. On this assumption, we are free to interpret it along a scale of inaccuracy, to the point of using an error as a means of restoring the original state of things. Driverless Car Afterlife, a 2017 digital sequence by François Quévillon, plays entirely on this premise: the erroneous result offered by an AI incapable of correctly interpreting what it sees.

Driverless Car Afterlife, 2017. Digital print on archival paper. 90 x 77 x 3 cm. Courtesy of the artist © François Quévillon.

The image of an overturned car becomes the vehicle of a visual perspective that renders its subject abstract, even as the title of the work takes that subject at face value. The factual aspect of the overturned car is unequivocal. Meanwhile, the image becomes something else. In the eyes of the AI, there are a sidewalk, a bike, and a fence; buildings that jostle for the sky; signs floating in the middle of nowhere. What the AI sees is a kind of unconscious that escapes us, an algorithmic surrealism. In the original image, the wheels become road signs that only the AI's "eye" can see. This highlights the highly rational, "accident"-free space that constitutes reality from the AI's point of view or, more precisely, the formula of a "reality concept" that amounts more to an image than to a reality.

Is it really an error?

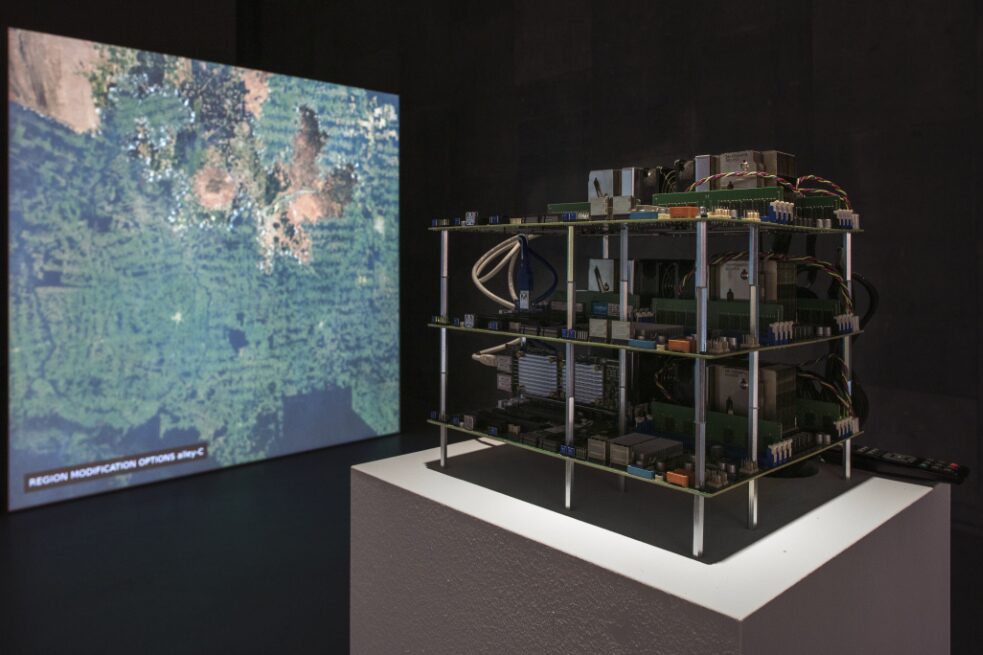

When algorithms interpret reality, they usually do so in an extremely cold manner, which gives an error the appearance of an authoritative statement, although there is nothing intentional in the AI's assertions. The installation Asunder (2019) by the artists Tega Brain, Julian Oliver, and Bengt Sjölén, presented at the last Transmediale festival for art and digital culture in Berlin, is an AI system that uses very powerful custom computers to process an enormous amount of data in real time in order to suggest solutions to various environmental problems. More specifically, a geographical region is described and analysed, its problems are identified, and solutions are then suggested. These "solutions" are not necessarily realistic; for the most part, they are downright impossible. When the AI suggests relocating a lake or an entire town or city, does it commit an error, or is it simply applying a relentless, cold logic? Perhaps we should re-evaluate the criteria on which we base our decisions, and perhaps we should consider a certain utopian vision in order to design a viable future, even if that vision would be perceived as an error from where we stand today. Today's error might eventually be tomorrow's solution.