Democracy in the Digital Space

“Be Careful What You Build”

Human rights lawyer and author Maureen Webb explains how “technical fixes” can exacerbate bias and discrimination, and how hackers are fighting to protect our democratic rights in the digital space.

By Johannes Zeller

In your book “Coding Democracy”, you describe hackers as productive disrupters against surveillance, concentrations of power and authoritarianism. You also argue that hackers can help us resolve the “information dysphoria” that currently plagues our societies. What is information dysphoria?

Information dysphoria describes the general malaise we’re facing right now in the West, the state of unease and disorientation we feel in the current digital environment where our information and social relations are increasingly being shaped by undetectable forces. It’s a more neutral term than fake news, misinformation, disinformation, bias, or discrimination, although it includes all these problems. In pathology, “dysphoria” describes a state characterized by anxiety, depression and – I think this is very pertinent to our present political situation – “impatience under affliction.”

What are some of these “undetectable forces” determining our reality?

There are many examples: algorithms that make opaque decisions and are socially sorting us all the time; big data with its many unexamined assumptions, taking over more and more governance functions; narrowcasting, a business model wherein media target ever smaller fragments of the population; political microtargeting, as we saw in the scandals surrounding Cambridge Analytica in the 2016 US presidential election and the Brexit vote; influence campaigns that deceive people as to their origin; viral disinformation campaigns; the rising use of deepfakes… to name just a few.

To what extent is information dysphoria exploited as a political tactic?

Democracy depends on deliberative, fact-based discourse. Inducing a state of information dysphoria in a population is an old tactic of authoritarian regimes and one we have seen over the last number of years creeping into Western democracies, intensified through digital media. You fill the public sphere with things that aren’t true, and you contradict yourself all the time. Then nobody knows what is true anymore, and you end up having a strong position in the manufacture of the symbols of the day. Symbols bypass debate and deliberation because they appeal directly to people’s emotions, to their grievances, their values and mythologies of loss and redemption. Information dysphoria is also used politically to justify mass surveillance and censorship. Impatient to “fix” the information environment, governments and elites start working closely with monopoly platforms to control what a population can hear, think, or say. This also destroys deliberative democracy.

Is information dysphoria ever used by the less powerful?

I suppose you might say that inducing a state of information dysphoria in a ruling elite is a tactic of political resistance by the less powerful. In a series of blog posts he wrote before he started WikiLeaks, Julian Assange explained his theory that leaking, especially mass leaking, is emancipatory not only because it exposes secrets the governed need to know, but also because it strangles communication among the powerful and disorients them.

Hacker groups like the German Chaos Computer Club (CCC) have been working against surveillance and for transparency for some time, haven’t they?

Yes, and they’ve employed another tactic of disorientation brilliantly: humour! For example, in the early days of the “war on terror”, when governments were pushing the idea of biometric identity papers, the CCC stole the fingerprints of the German minister of the interior at the time, Wolfgang Schäuble, and published them in a magazine, printed on plastic film that readers could use to impersonate the minister when walking through security checkpoints. It was a very humorous way of illustrating the vulnerability of such a system, and it influenced the way the public felt about these systems – and about the minister.

How are bias and discrimination inscribed into digital technologies?

Let’s take an often-discussed example: the algorithms all around us that decide who gets a loan, who gets a job interview, who gets insurance and how much they pay… They don’t automatically make things fair. A lot of bias and discrimination gets built into them and goes unexamined. In the analogue world, we have developed all kinds of analyses and disciplines for ferreting out and addressing bias. But in the digital world, these often get lost in translation. The code itself is often a “black box”, and few people understand what is going on inside that box. With machine learning, even the developers may not be able to say.

Can you give us an example of how this digital bias affects decision-making and people’s lives?

At one point Amazon used algorithms to hire software engineers and they found that their mechanism was only flagging the resumes of men. The reason for this was that they had used the resumes of employees they’d already hired to determine the criteria for hiring. So, the bias was in the historical data and got reproduced by the algorithm.

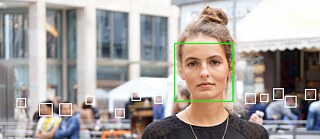

Could the same happen with technology that is used by governments, for example facial recognition software?

A study of two major facial recognition programs found they failed to identify gender one percent of the time when the subject was a white male and 35 percent of the time when the subject was a darker-skinned female. That’s because the programmers trained their algorithms on datasets of people who mostly looked like themselves. Examples like this are worrying when one considers that our criminal justice and other enforcement systems are increasingly relying on algorithmic decision-making. Note too that in fully automated enforcement systems there is no appeal, no human judgment, no mercy or discretion.

Why does the use of biased and discriminatory technology not meet more public resistance?

It’s hidden and not understood, but there are other things going on too. People are impatient, they want “silver bullets” and too readily act on illiberal impulses. That includes everyone. We must be courageous and view these phenomena in a non-partisan way. I think it is extremely important for progressives to recognise that they have biases too. Censorship by search algorithms has become the solution of choice for countering opinions, questions, and debates that progressives don’t like. I speak as a person who comes from the Left; I am a trade unionist and a human rights lawyer. We are also inscribing our biases into various technological processes. There are situations when we might think we’re doing it for good causes, but we are actually doing more harm than good.

Such as?

For example, there was a Hollywood app that was intended to battle discrimination against Black people in the film industry. It started as a simple registry of Black people who work in that industry. The next step the developers had planned was providing the studios with demographic breakdowns of the racial identities of their workers for each production. In a talk at the Goethe-Institut in San Francisco, I used a legal analysis of the relevant human rights and labour law to show what a bad idea that might be.

What was the problem with this Hollywood app?

I’m completely on board with increasing the participation of minorities in the workplace at all levels. But I had to ask: If you’re going to provide a demographic breakdown of workers’ identities, what categories would you include? Not just race and gender, but also family, religion, socio-economic status? Human rights legislation protects these and more. Are you aiming for proportional representation, and how is that even attainable given that everyone has multiple, intersectional identities that add up to far more than 100 per cent? Also, workers have a right to privacy. There might be all kinds of reasons why they don’t want to report their “identities” to their employer, including the practical fear they’ll be passed over if studios know they have a disability or criminal record. So then there’s the question: What about those who don’t want to participate? Do you really want to force every worker to carry an identity report card, a “race card”, just because our information society is capable of building a system like that? What starts out as a good, progressive idea can quickly become a bad idea with unintended consequences when you build it out as a “technical fix”.

Progressive governments have just employed this kind of “technical fix” in the form of the vaccination passport. Could there be unintended consequences here too?

This is another important example where progressives must check their own biases. In a breathtakingly short time, progressive Western governments, confident of their own virtue and superior intelligence, have got broad swathes of their populations to accept a mass surveillance and social credit scheme along the Chinese model. If these governments had a legitimate public health objective, they could have used temporary, single-purpose cards with anti-forgery protection, like those we have in our money, driver’s licenses, and health cards. Instead, they chose to use a cell-phone app with personal and health information embedded in a QR code. They built a system, unprecedented in the West, that is very likely to expand both in its functionality and its permanence. My argument is that digital social-sorting tools are dangerous in anyone’s hands. They are instruments of social control with machine logic, not humanist sensibilities, and they have consequences far beyond what their developers can usually imagine. My urgent comment is: Be careful what you build! As a society, we have to be careful what we permit to be built.

In your book you argue that hackers are the ones offering some of the best solutions to the democratic challenges we just talked about.

I think hackers, more than other groups, are on the right track. I say this because I don’t think that narrow, technical fixes will get us out of this mess. What hackers have been fighting for over the last three decades are really principles of governance and ethics in the digital era. Progressive hackers are all about distributed power, distributed decision-making, and distributed democracy. They’re about open access to the internet, decentralisation, and commons-based solutions. Their main tenet, known as “the hands-on imperative”, is that access to anything that might teach you how the world works should be unlimited. This means that everyone should have the freedom to study, interrogate and improve systems, especially computer systems.

Could we say that hackers are inherently sceptical of all systems because they know that all systems can be abused?

Yes, I think that’s a very penetrating observation.

Returning to the hacker tenets you listed earlier, these seem to be grounded in the “hacker ethic” outlined by Steven Levy almost forty years ago – in 1984, ironically.

Yes, there’s a whole genealogy of hacker ideas that stretches back to the earliest hackers in the 1950s, which Levy summarised in the 1980s, and which contemporary hackers continue to develop. Today, the “hacker ethic” encompasses the principles of privacy and data self-determination, transparency, net neutrality, free software, and the “hands-on imperative” – all these will be key to preserving our democracies in the digital age and to mitigating our information dysphoria.

The contemporary hacker slogan that many of our readers might have heard is, “privacy for the weak, transparency for the powerful.” Why is privacy for the weak such an important issue for hackers?

Privacy guarantees autonomy and security in one’s personal sphere. If you want to protect users from microtargeting and disinformation campaigns, you have to guarantee them online privacy and control over their own personal data, otherwise they’re going to be exploited. Hackers have been working for years to create civilian encryption tools and to secure data self-determination.

And what about transparency for the powerful?

Transparency really is the only antidote to misguided or corrupt governments. WikiLeaks has ushered in an era of unprecedented transparency for the powerful. They’ve been followed by many other leaking platforms, including those used now by most mainstream media organisations.

What about the other hacker principles?

Net neutrality was built into the original internet, which was a decentralised network that did not monitor or discriminate against users and content. Everyone could use it freely for their own purposes: there were no gatekeepers. What people have to understand is that the hackers’ fight for net neutrality is a fight for free communication in the digital age.

You also mentioned “free software”.

Free software does not have to be “free” as in “free of charge” – but it must be free to be scrutinised, modified, built upon, and shared without restriction. There should be no “black boxes”. The idea that software should be in the control of its users is a bedrock of freedom and democracy in the digital age.

During the research for your book, you stayed at the Chaos Communication Camp organised by the CCC in Berlin, and you also visited hacker groups in Spain and Italy. Are there any striking differences between the European scene and the scene across the Atlantic?

I had the pleasure of meeting hackers from many different countries and there was a striking difference among the countries, but particularly between Europeans and Americans. And I say this even though there is an increasing internationalisation of the hacker world. I found the European scene – particularly the German hackers – to be both more irreverent and more serious than the American hacker scene. An irreverent yet politically serious act like the publication of a minister’s fingerprints would likely not be done by American groups – and the joke would not have landed in America the way it landed in Germany either.

What’s different about the American hacker scene?

The American scene today is heavily commercialised. Many hackers have been hired into the security and military complex, big tech, and even the Democratic Party. Hacker conferences like Defcon and Black Hat are very commercially oriented. In 2013, a member of the Department of Homeland Security Advisory Committee delivered a keynote at Defcon. A lot of people in the US who would describe themselves as hackers do not care as much about the societal impact of their technology.

Is the European scene more democratically and socially aware?

This is very much the case today in Spain, France, and Italy. Of course, German hackers and the CCC have always been at the cutting edge of discussions about technology and society. And they’re making a lot of progress. Like Nelson Mandela’s African National Congress, the CCC’s reputation has morphed over time from suspected criminality to respectability. Today they’re a valued group regularly consulted by the German and European parliaments.

What’s the future of the hacker movement?

The progressive hacker scene is growing into an international movement. It is increasingly connected and working with citizen groups that are aware of all the issues we have talked about. They try to use hacker skills and technological understanding to remedy the problems that we face. The democratic challenge now is to preserve Western liberal principles while achieving broad consensus. This might be possible with the models of distributed democracy and decision-making advocated by some hacker groups.