Images and Bias

What Do Normal People Look Like?

What is normal? And what constitutes a normal face? Our brains are constantly analyzing and classifying every face we encounter, and we as people are not alone in this. A whole field of science and technology analyzes these sub-attentive cognitive processes and breaks them down into statistical normalities. Through machine learning, facial recognition is even being used to categorize and predict human behavior.

By Mushon Zer-Aviv

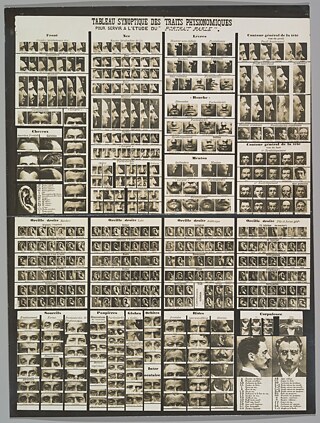

The Speaking Portrait

Paris changed dramatically during the 19th century, as the Industrial Revolution attracted villagers to try their luck in the big city, starting an urbanization trend that continues to this day. For the first time in their lives, both native Parisians and the new kids on the block found themselves surrounded by new, unfamiliar faces every single day. These demographic changes, together with the rising divide between the industrialist and the worker classes, slowly chipped away at the city’s social fabric. The rising alienation between residents worsened the anxiety in the streets and contributed to a spike in crime.

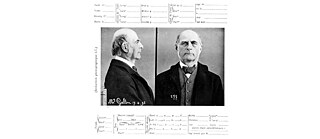

For Bertillon it was nothing more than an indexing system to aid in identification. And indeed the “Bertillonage” spread quickly through Europe and the US as the cutting-edge scientific best practice for photographic police identification. But just a few years later, much to Bertillon’s disappointment, it was replaced by the fingerprint, which proved to be a much simpler and more accurate identification technology.

What Is Normal?

The study of the fingerprint was among the many scientific contributions of Sir Francis Galton, a prolific British intellectual and a statistics pioneer. Another of Galton’s major scientific contributions concerned statistical normality, or the normal distribution. He observed that what may seem to be random phenomena often reveal a pattern of probability distributed around a peak, forming a curve shaped like a bell – “the bell curve”. Most observations cluster near the peak of the bell curve rather than towards the edges.

To demonstrate this fairly abstract statistical phenomenon, Galton built a peculiar device that somewhat resembled a pinball machine and filled it with beans. At the top of the board, the beans were funneled into a single outlet at the center of the board from which they dropped down into an array of pins. The beans bounced around the pins on their way down and finally landed in slots equally distributed along the board’s base. While there is no way to predict exactly which slot each bean would fall into, the overall distribution always repeated the bell curve shape, where the center slot contained the most beans and those to its left and right would gradually collect fewer beans.
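Galton's bean machine can be approximated in a few lines of code. The following is a minimal sketch, not a reconstruction of the physical device: it assumes each pin deflects a bean left or right with equal probability, and the function name `galton_board` and its parameters are chosen here for illustration only.

```python
import random
from collections import Counter
from typing import List, Optional


def galton_board(num_beans: int, num_rows: int, seed: Optional[int] = None) -> List[int]:
    """Simulate beans falling through rows of pins.

    Assumption: every pin is a fair coin flip (left = 0, right = 1).
    Returns the number of beans that land in each of the num_rows + 1 slots.
    """
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(num_beans):
        # The final slot is simply the number of times the bean bounced right.
        slot = sum(rng.randint(0, 1) for _ in range(num_rows))
        counts[slot] += 1
    return [counts[slot] for slot in range(num_rows + 1)]


if __name__ == "__main__":
    slots = galton_board(num_beans=2000, num_rows=10, seed=1)
    for index, beans in enumerate(slots):
        # One '#' per ten beans gives a rough text histogram.
        print(f"{index:2d} {'#' * (beans // 10)}")
```

No single bean's path can be predicted, yet the printed histogram bulges in the middle and thins out towards both edges, which is the pattern Galton's board made visible.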

This handy mathematical formulation helped statistical normality become much more than a scientific standard. Galton aspired to use the lens of statistics for every aspect of life, and normality quickly expanded beyond the context of the natural sciences. Prior to Galton’s work at the end of the 19th century, it was very abnormal to use the word normal to describe anything outside of the realm of the natural sciences. Following Galton’s findings, the concept of normality was itself quickly normalized, and through the work of Émile Durkheim and other sociologists it permeated the social sciences and culture at large.

Normal Face Or Deviant Face?

In 1893, Galton visited Bertillon’s forensic lab and became a great fan of the Bertillonage. He was not interested in what Bertillon’s tools and methodologies might reveal about individuals’ responsibility for past crimes; Galton was interested in statistically predicting future deviations.

Bertillon himself never meant for his technology to be used in this way. When confronted with the scientific racism theories of the contemporary Italian criminologist Cesare Lombroso, Bertillon said: “No; I do not feel convinced that it is the lack of symmetry in the visage, or the size of the orbit, or the shape of the jaw, which make a man an evil-doer.”

Bertillon argued that he saw a very wide distribution of faces go through his forensics lab, and that an eye defect, for example, did not indicate that a person was born a criminal, but that his poor eyesight may have left him few alternatives on the job market.

Bertillon was datafying the body to identify and correlate past behavior, not to project and predict future acts. Yet, the same datafication and classification of behavior and personal traits allowed for both forensic identification and statistical prediction. Scientific racism and physiognomy went on to shape the 20th century. In his book Mein Kampf, Adolf Hitler refers to American eugenicists as a major source of inspiration for what Germany later developed into Nazi eugenics. The Nazis pushed this philosophy and practice to its horrific extreme with the mass exterminations and genocide of Jews, Romani, homosexuals, disabled people, and others marked as deviations from the normalized racist Aryan image. After the Nazi defeat in World War II, the ideas of physiognomy and scientific racism received international condemnation. In the following years they lay dormant underneath the surface, but they did not evaporate, for they were deeply embedded in the heritage of statistical normality.

The Portrait Speaks Again

Facial analysis made a dramatic comeback in the second decade of the 21st century, powered by the latest advancements in computer-enhanced statistics. Today’s “data scientists” are the direct intellectual descendants of the 19th-century statistics and datafication pioneers. Some of them focus on identification, updating Bertillon’s attempts to forensically identify individuals by correlating data with past documentation. Others, like Galton, focus on analysis, attempting to statistically project future behavior based on patterns from the past. Today both identification and projection are often used in combination in an attempt to exploit the technology to the fullest extent. For example, in November 2021, after pressure from regulators, Facebook announced it would stop using facial recognition to identify users in pictures and said it would implement its “Automatic Alt Text” service that analyzes images to automatically generate a text description of content.

Data centers are accumulating more and more data. They create an ever more detailed portrait of our past behavior that feeds the algorithmic predictions of our future behavior. Much like Galton’s composite portraiture, the image of normal behavior is constructed from multiple superimposed samples of different subjects. Every bean of data is funneled through algorithmic black boxes to find its way into the normally distributed slots along the bell curve of future normalized behavior. Normalization through data becomes a self-fulfilling prophecy. It not only predicts the future; it dictates the future. When the predicted path is the safest bet, betting on deviation becomes a financial, a cultural, and sometimes a political risk. This is how data-driven predictions normalize the past and prevent change. Machine learning algorithms are therefore conservative by design, as they can only predict how patterns from the past will repeat themselves. They cannot predict how these patterns may evolve.

The discourse on online privacy still informs the discussion around a person’s right to keep their past activities private. But today’s algorithmic surveillance apparatuses are not necessarily interested in our individual past activities. They are interested in the image revealed by our composite portraits – the one that defines the path of normality and paints deviations from the norm as suspicious.

Online Normalizi.ng

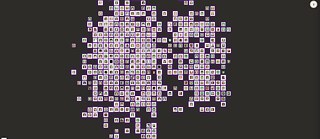

Like machines, we as people are also constantly categorizing the faces of others. We capture, classify and analyze each other’s physiognomic features. But are we aware of our daily biases, of the way we constantly define what is normal and what is not? How do we categorize people and how are we categorized? That’s what the Normalizi.ng project is all about. It is an experimental online research project using machine learning that aims to analyze and understand how we decide who looks more “normal”.

Participants in the Normalizi.ng web experience go through three steps that are common to both early and contemporary statistics. In the first step – capture – they are encouraged to insert their faces into a frame and capture a selfie. In the second step – classify – they are presented with a series of previously recorded participants. They then swipe right and left to classify which of a pair of noses, mouths, eyes, and faces looks more “normal”. In the third step – analyze – the algorithm analyzes their faces and their normalization swipes. It files them in a Bertillonage-inspired arrest card with dynamic normalization scores and then adds their faces to a Portrait Parlé-inspired algorithmic map of normality.
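The article does not say how Normalizi.ng turns swipes into scores, so the following is only a hypothetical sketch of one simple way pairwise choices could be aggregated: each face's score is the fraction of comparisons in which it was picked as more “normal”. The function name `normality_scores` and the data layout are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def normality_scores(swipes: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Aggregate pairwise swipes into a per-face score.

    Each swipe is a (chosen_id, rejected_id) pair: the participant judged
    the first face more "normal" than the second. The score is a plain
    win rate, a stand-in for whatever the real project computes.
    """
    wins = defaultdict(int)
    appearances = defaultdict(int)
    for chosen, rejected in swipes:
        wins[chosen] += 1
        appearances[chosen] += 1
        appearances[rejected] += 1
    return {face: wins[face] / appearances[face] for face in appearances}


if __name__ == "__main__":
    example_swipes = [("a", "b"), ("a", "c"), ("b", "c")]
    print(normality_scores(example_swipes))
```

Even in this toy version, the score contains nothing but the accumulated judgments of earlier participants: whatever biases guided their swipes become the measure applied to the next face.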

Unfamiliar faces are a major part of what makes Paris and so many other big cities so exciting, so full of possibilities. Paris will never be a small homogeneous town again. Neither will the internet. The algorithm is the cop who sees you only through your stereotype, never really knowing you as a person, never talking to your mother. Let us stop and ask ourselves, is that something we want to systematize? To automate? To amplify? And anyway, is normality even something we should be policing?

The panel (Mis)Reading Human Emotions – with Mushon Zer-Aviv – at the festival When Machines Dream the Future