Digitalism as a cultural technique: From alphanumerics to AI

Artificial intelligence is undoubtedly already influencing our traditional cultural techniques. But have we reached the point where the use of AI itself could be described as a cultural technique? One thing is certain: We have long utilised digital systems in our daily lives despite not having the slightest idea how they work.

By Sybille Krämer

Thanks to the alphabet and decimal numbers, the ‘three Rs’ of writing, reading and arithmetic advanced to the level of ‘cultural techniques’ in Europe’s rising merchant capitalism. For the first time, arithmetic could be performed purely in writing, without an abacus or counting frame. Elementary arithmetic was thus deconsecrated; it became a skill that was no longer the preserve of talented mathematicians and could be learnt at school. Once addition, subtraction, multiplication and division had been learnt by heart, arithmetic was nothing more than the manipulation of symbols on paper; it was no longer necessary to comprehend that complex numerical operations were involved.

Correct calculations could be made without ever interpreting the meaning of ‘0’; that is why ‘nothing’ can be quantified and treated as a number. Recognising the meaning of a mathematical symbol means knowing which operation to carry out, or how to transform a pattern according to fixed rules. The symbolic and the technical were intertwined, which is the essence of all cultural techniques. Truth was replaced by correctness.
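What it means to calculate correctly without interpreting the symbols can be illustrated with a small sketch. It is only an illustration under stated assumptions: the names `ADD_TABLE` and `add_written` are hypothetical, and the lookup table stands in for the addition facts a pupil learns by heart. The procedure itself never treats the numerals as quantities; it only looks up patterns and writes down the result, column by column.

```python
# Illustrative sketch: written addition as pure symbol manipulation.
# ADD_TABLE and add_written are hypothetical names chosen for this example.

DIGITS = "0123456789"

# The "facts learnt by heart": (digit, digit, carry) -> (result digit, new carry).
# The table is built once; afterwards the procedure only looks symbols up.
ADD_TABLE = {}
for i, a in enumerate(DIGITS):
    for j, b in enumerate(DIGITS):
        for c in (0, 1):
            total = i + j + c
            ADD_TABLE[(a, b, str(c))] = (DIGITS[total % 10], str(total // 10))


def add_written(x: str, y: str) -> str:
    """Add two decimal numerals given as strings, column by column."""
    width = max(len(x), len(y))
    x = x.rjust(width, "0")  # pad the shorter numeral with leading zeros
    y = y.rjust(width, "0")
    carry = "0"
    columns = []
    # Work from the rightmost column to the leftmost, as on paper.
    for a, b in zip(reversed(x), reversed(y)):
        digit, carry = ADD_TABLE[(a, b, carry)]
        columns.append(digit)
    if carry == "1":
        columns.append("1")
    return "".join(reversed(columns))


print(add_written("478", "64"))
```

The ‘0’ is handled like any other symbol in the table, which is exactly the point: correctness depends on following the rules, not on knowing what the symbols stand for.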

Being able to do something without having to understand how and why it works is a common characteristic of the use of any technical device.

With the advent of written calculations, the symbolic machine of formal arithmetic operations was born. Any kind of intellectual work that could be turned into a formal system could be carried out in a mechanical and algorithmic way: whether by humans or machines. The philosopher and mathematician Alfred North Whitehead noted that civilisations advance by expanding the number of operations that can be performed without having to think about them.

The use of symbolic machines for formal arithmetic operations was associated with control and transparency – there was no scope left for magic or mysterious tricks. Once the solving of equations incorporated letters and became algebra, algebraic rules (for example (a+b)² = a²+2ab+b²) could be written down in a universally valid way for the first time. Algebra, formerly an Ars Magna et Occulta, became a form of mathematics that could be taught and learnt.

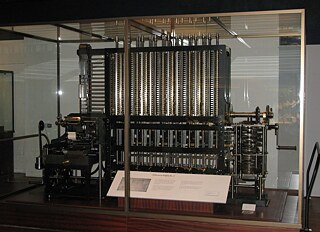

150 years later, Ada Lovelace (1815-1852), daughter of the eccentric poet Lord Byron and his wife Anne Isabella Noel-Byron, realised that machines could potentially calculate using not only numbers, but also symbols (in the Leibnizian sense) – symbols that could represent music, language or numbers. She developed the first executable program for a universal machine, even though such a machine – designed by Charles Babbage – only existed on paper at the time. Her program for the automatic calculation of Bernoulli numbers was clearly arranged in tabular form for human hands and eyes.

Yet for the machine – according to Lovelace and Babbage’s ideas – it was sufficient to simply enter the binarized starting values and instructions in the form of a punch card pattern (a technique similar to that used to create complex patterns on a Jacquard loom). This was the first time that the separability of hardware and software was recognised and put into practice. Ada Lovelace was interested in both mathematics and music and expressed the hope that such machines could one day also be used to compose music.
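To give a sense of the kind of calculation involved, here is a short sketch that computes Bernoulli numbers. It is not a reconstruction of Lovelace’s program: it uses a standard textbook recurrence and the convention B_1 = -1/2, and the function name `bernoulli_numbers` is an assumption made for this illustration.

```python
# Illustrative sketch, not Lovelace's procedure: Bernoulli numbers via the
# standard recurrence  B_m = -1/(m+1) * sum_{k<m} C(m+1, k) * B_k,  B_0 = 1.
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return [B_0, ..., B_n] as exact fractions (convention B_1 = -1/2)."""
    b = [Fraction(1)]
    for m in range(1, n + 1):
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-acc / (m + 1))
    return b


# Print the values in a small table, one index per row.
for index, value in enumerate(bernoulli_numbers(8)):
    print(index, value)
```

Every step is a fixed rule applied to previously written results, which is why such a computation can be laid out as a table for a human reader or handed to a machine as a pattern of instructions.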

With the programmability of computers, it seemed that humankind had reached the highest level of agency and control: Everything that could be described in a formal configuration of symbols could be carried out by computers. And given that intellectual work is (to a large extent) so deeply rooted in the cultural technique of organising formal symbols, this seemed to lay the foundation for machines that can think for us – either bit by bit or entirely. The artificial intelligence project started to take shape, emerging from the cultural techniques of formal symbol manipulation.

But doesn’t the cultural-technical anchoring of intellectual work obscure one of its important modalities? The psychologist and Nobel Prize winner Daniel Kahneman (born 1934) made a distinction between ‘slow’ and ‘fast’ thinking. ‘Slow’ refers to laborious, time-consuming, symbol-processing thought processes; the kind of thinking we use to debate, write or calculate. Such thought processes are undoubtedly shaped by cultural-technical aspects. But when we recognise voices, faces or even objects, this typically occurs at a speed that is instantaneous, automatic and almost instinctive, even though we are unable to explicitly state what it is that distinguishes our neighbour’s voice and face from the thousand other faces and voices we perceive.

Indeed, machine modelling of this perception-based ‘fast thinking’ has played a role in the artificial intelligence project ever since it started. Since the invention of Warren McCulloch and Walter Pitts’s neuron model (1943) and Frank Rosenblatt’s perceptron algorithm (1958), attempts have been made to do away with explicit programming and rely instead on machine learning. And these attempts were not inspired by the cultural technique of written computation, but rather by the basic mechanisms of networked neural activities in the brain. Whether a neuron fires is the result of inhibiting and reinforcing signals arriving over its synapses, and learning changes the weighting of these connections. According to the basic idea, this is how artificial neural networks can learn to classify different kinds of inputs with regard to a desired output.
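The basic idea of learning by adjusting weights can be sketched in a few lines. The following is a minimal illustration in the spirit of the perceptron learning rule, not Rosenblatt’s original formulation; the function names, the learning rate and the choice of the logical AND as a toy task are all assumptions made for this example.

```python
# Minimal illustrative perceptron (names and toy task are assumptions).
# No rule for AND is programmed in; the weights are adjusted from examples.


def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else 0."""
    s = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if s > 0 else 0


def train_perceptron(samples, epochs=100, rate=1):
    """Adjust weights after every misclassified example until none remain."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        errors = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                # Reinforce or inhibit each connection in proportion to its input.
                weights = [w + rate * error * x for w, x in zip(weights, inputs)]
                bias += rate * error
                errors += 1
        if errors == 0:  # every example is classified correctly
            break
    return weights, bias


# Toy training data: inputs and the desired output (logical AND).
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, predict(weights, bias, inputs), target)
```

What the trained system ‘knows’ is contained entirely in a handful of numbers. Here they can still be inspected by hand; in a network with millions of weights they cannot.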

The reason for the recent success of such networks is not the neural networks themselves, which we’ve known about since the 1950s, but rather the immense amounts of training data made available through users’ online activities or produced by armies of paid clickworkers, combined with the vastly increased computing power of today’s processors. This has made datafication possible, the continuous translation of real-world conditions into a stream of data. If the world had not metamorphosed into a machine-readable data universe, companies such as those developing ‘self-driving cars’ would be untenable. Even so, a child needs only three example images to learn how to distinguish between a cat and a dog; deep learning with seven hidden layers requires a million examples. And the cat detection algorithm still doesn’t know that cats are not flat! This extreme data consumption can place limits on deep learning.

Another aspect is even more problematic. Even if we analyse the algorithm’s output, it is impossible to work out the internal cat detection model that the algorithm has developed based on the millions of training files. The trained algorithm embodies a “knowing how” that cannot be reconstructed as a “knowing that” which humans can understand. The consequence is “black boxing”. The more successful a trained algorithm is, the more invisible its internal machine model.

Remember: To algorithmise a human activity means spelling out, explicitly and in an intersubjectively comprehensible way, how that activity is to be carried out as a routine, so that a machine can execute it. However, as the AI learns, the routinisation of its procedures (e.g. smartphone camera settings, traffic jam reports on navigation systems, or the page rankings of search results) leads to another tendency: The efficiency of these data technologies swallows up the transparency and makes it impossible for us to know how they do what they do.

The two-faced nature of technology not only means that it can be used to achieve both good and bad objectives. It also means that in relation to using technology, knowledge and ignorance are two sides of the same coin, because for technology users, being able to use a device and knowing how it works are two very different things. It may be possible to modify this tension, but it cannot be completely eliminated.

If we call artificial intelligence a cultural technique 4.0, this raises the question of whether we should regard it as an ‘ordinary technology’ within this tension of knowledge/ignorance. One thing is certain: Even if we classify AI in this way, we still have to assume it will have radical consequences for culture and society, similar to the effects of the steam engine at the start of the Industrial Revolution.

This article is part of Kulturtechniken 4.0, a web project from the Goethe-Institut in Australia which looks at the interplay between artificial intelligence and traditional cultural skills.