Understanding Neural Machine Translation

How Artificial Intelligence "Works" in Literary Translation

When Google Translate was first launched in 2006, it could only translate two languages. By 2016, it was supporting over 103 languages and translating over 100 billion words a day. Now, not only does it translate, but it can also transcribe eight of the most widely spoken languages in real time. Machines are learning, and they are learning fast.

There are still some linguistic codes that machines have yet to crack. Artificial intelligence continues to struggle with the vast complexity of human language – and nowhere is language more complex and meaningful than in literature. The beauty of words in novels, poems and plays often lies in nuance and subtlety. But because traditional machine translation systems translate on a word-by-word basis, using rules set by linguists, they have failed to capture the meaning behind literary texts. They have struggled to understand the importance of context: the sentence, paragraph and page within which a word resides.

However, there is a new technology that is learning to make sense of this contextual chaos – Neural Machine Translation (NMT). Although still in its infancy, NMT has already proven that its systems will in time learn how to tackle the complexities of literary translations. NMT marks the beginning of a new era of artificial intelligence. No longer do machines play by the rules written by linguists – instead they are making their own rules, and have even created their own language.

Neural Machine Translation (NMT)

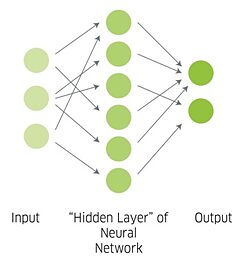

Launched in 2016, NMT is the most successful machine translation software to date. Not only does it boast an impressive 60% reduction in translation errors compared to its predecessor, Statistical Machine Translation (SMT), but it also translates much faster.

These advancements are due to the system’s artificial neural network. Said to be based on the model of neurons in the human brain, the network allows the system to make important contextual connections between words and phrases. It can make these connections because it is trained to learn language rules by scanning millions of example sentences from its database to identify common features. The machine then uses these rules to build statistical models, which help it to learn how a sentence should be constructed.

The Artificial Neural Network. A source sentence enters the network, is then sent to different hidden network “layers”, before finally being sent back out in the target language.

| Alana Cullen | CC-BY-SA

An Artificial Language

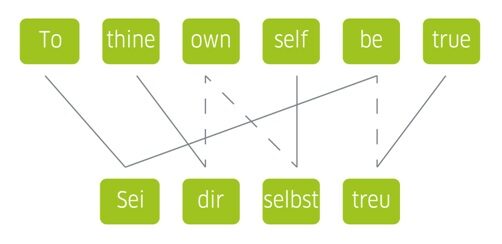

A breakthrough feature of NMT is its use of a new “common language” made up of numbers.

Take the phrase “To thine own self be true” from Shakespeare’s Hamlet. First, each word of the phrase would be encoded into numbers (called a vector): 1, 2, 3, 4, 5, 6. This string of numbers then enters the neural network, as seen here on the left. It is here, in the hidden layers, that the magic happens. Based on its learnt language rules, the system finds the most appropriate words in German. The numbers 7, 8, 9, 10, 11 are produced, which correspond to the words in the German target sentence. These numbers are then decoded into the target language: “Zu dir selber treu sein”.

Essentially, the system translates words into its own language, and then “thinks” about the best way to make them into a comprehensible sentence based on what it already knows – much like the human brain.
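The encode, translate and decode steps described above can be sketched in a few lines of Python. This is only a toy illustration: the helper names (`build_vocab`, `encode`, `decode`, `toy_network`) are hypothetical, and the "network" is a hard-coded lookup standing in for the hidden layers, which in a real NMT system are learnt from millions of example sentences.

```python
# Toy sketch of "words -> numbers -> network -> numbers -> words".
# All names here are illustrative assumptions, not part of any real NMT system.

SOURCE = "To thine own self be true"
TARGET = "Zu dir selber treu sein"


def build_vocab(sentence, start):
    """Give each word in the sentence a number, counting up from `start`."""
    return {word: number for number, word in enumerate(sentence.split(), start=start)}


source_vocab = build_vocab(SOURCE, start=1)   # To=1, thine=2, ... true=6
target_vocab = build_vocab(TARGET, start=7)   # Zu=7, dir=8, ... sein=11
number_to_target_word = {number: word for word, number in target_vocab.items()}


def encode(sentence, vocab):
    """Turn a sentence into its list of numbers."""
    return [vocab[word] for word in sentence.split()]


def toy_network(source_numbers):
    """Stand-in for the hidden layers: a memorised lookup, NOT a trained model."""
    memorised = {(1, 2, 3, 4, 5, 6): [7, 8, 9, 10, 11]}
    return memorised[tuple(source_numbers)]


def decode(numbers, number_to_word):
    """Turn a list of numbers back into words."""
    return " ".join(number_to_word[number] for number in numbers)


source_numbers = encode(SOURCE, source_vocab)
target_numbers = toy_network(source_numbers)
print(source_numbers)
print(decode(target_numbers, number_to_target_word))
```

In a real system each word is represented by a long list of learnt numbers rather than a single integer, but the overall flow from source words to numbers and back to target words is the same.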

Understanding Context

You shall know a word by the company it keeps.

John R. Firth, 1957, the father of contextual linguistics

Much like a brain deciphering pieces of information, the artificial neural network looks at the information it has been given and generates the next word, based on its neighbouring words. Over time, it “learns” which words to focus on, and where to make the best contextual connections based on previous examples. This process is a form of “deep learning” and allows translation systems to continuously learn and improve as time goes on. In NMT, deciphering context is called “alignment” and happens in the attention mechanism, which is situated between the encoder and decoder in the machine’s system.
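To make the idea of “which words to focus on” more concrete, here is a minimal sketch of one common form of attention (dot-product scores followed by a softmax). The article does not say which variant a given system uses, so treat this as an assumption. The two-number word vectors and the `query` are invented for illustration; in a real system they are learnt during training.

```python
import math

# Made-up 2-number vectors for each source word (assumption: real systems
# learn much longer vectors from data).
source_vectors = {
    "To":    [0.1, 0.0],
    "thine": [0.0, 0.3],
    "own":   [0.1, 0.2],
    "self":  [0.2, 0.4],
    "be":    [0.3, 0.1],
    "true":  [1.0, 0.2],
}

# Hypothetical decoder state at the moment it is about to produce "treu".
query = [2.0, 0.5]


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attention_weights(query, vectors):
    """Score each source word against the query, then normalise the scores."""
    words = list(vectors)
    scores = [sum(q * v for q, v in zip(query, vectors[word])) for word in words]
    return dict(zip(words, softmax(scores)))


weights = attention_weights(query, source_vectors)
most_attended = max(weights, key=weights.get)
for word, weight in weights.items():
    print(f"{word:>6}: {weight:.2f}")
print("Most attended source word:", most_attended)
```

With these invented numbers the largest weight falls on “true”, which is the kind of link between a target word and its most relevant source word that alignment is meant to capture.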

The Process of Alignment. Alignment occurs in the attention mechanism of the artificial neural network and works to deduce the context of a word.

| Alana Cullen | CC-BY-SA

Of course, machines aren’t perfect. When translated back into English, the phrase becomes “Be true to yourself”, not quite capturing the tone or historical register of Shakespeare writing in Tudor England. The literal word-for-word translation would be “Sei deinem eigenen Selbst treu”, but in German, Shakespeare's words are usually translated by humans as “Sei dir selbst treu”.

What is exciting here is how Google Translate has grasped the importance of the word “treu” in this context. Use of this German word implies that Google Translate has distinguished between “treu” meaning “true” in the sense of being "faithful" to one's true nature, and another German word for “true” such as “wahr” which means that something is factually correct.

Human translation. When translated by a human, the links are much more complex than when the phrase is translated by a machine. This is due to a higher level of contextual understanding.

| Alana Cullen | CC-BY-SA

Deep learning means that a mistranslated phrase is likely to be at least partially corrected a few weeks later. (Perhaps when this article is published, Google Translate will have corrected its mistakes!)

This continuous improvement alongside the machine's own language means NMT can be trained to perform “zero-shot translation”. This is the ability to map one language directly onto another, without having to use English as an intermediary. It would appear that for machines, as with humans, practice makes perfect.

Lost in Translation

Although machine translation has come a long way in recent years, it still fails to meet acceptable literary standards. Henry James emphasised the importance of understanding the text in its original language when he said the ideal literary translator must be a person “on whom nothing is lost.” And at least with literature, machines are still a long way from matching this ideal.

NMT continues to struggle with rare words, proper nouns and highly technical language in literary translation, with only 25-30% of the output deemed to be of acceptable literary quality. One study on translating novels from German to English (see reference at the end: The Challenges of Using Neural Machine Translation for Literature) found that while there were few syntactic errors, the meaning of ambiguous words continued to be lost in translation. Despite these errors, however, researchers found that the quality of the text after machine translation was high enough for the story to be understood, and even to be enjoyable to read. Another study on translating novels from English to Catalan yielded similarly impressive results: on average, 25% of native speakers thought the quality was similar to that produced by human translators (see reference at the end: NMT translates literature with 25% flawless rate).

However, the machine system doesn’t perform equally with all language pairs. It particularly struggles with morphologically rich languages where there is lots of inflection, such as the Slavic languages. This is particularly noticeable when translating from a less complex language into a more complex one – meaning that NMT cannot be utilised as a global translation tool just yet.

Finding the Appropriate Voice

The biggest challenge that remains is finding the correct tone and register for the translated text. Peter Constantine, Director of the Literary Translation Program at the University of Connecticut, says machines must find the “appropriate voice” if they are to succeed in literary translation.

What will the machine be mimicking? Is it going to do some beautiful and brilliant foreignisation, or is it going to do an amazing domestication, or is it going to make Chekhov sound like he was writing 10 minutes ago in London?

Peter Constantine (2019)

An Essential Collaboration

Clearly, despite the machine’s best efforts, the intrinsic ambiguity and flexibility of human language found in literary texts continues to need human management. NMT cannot replace human translators, but instead serves as a useful tool in translating literature.

Collaboration between humans and machine translation systems is key. One answer to this problem is likely to come in the form of post-edited machine translation. Here, professional translators, with knowledge of the issues involved in machine translation, will edit and correct the machine’s first draft – similar to how an established translator would help edit an inexperienced translator’s work. Light post-editing consists of minor edits, such as spelling and grammar, while full post-editing addresses deeper issues, including sentence structure and writing style. Certainly, when it comes to translating literature, full post-editing is likely to be required to generate the correct register and tone of the writing. Researchers translating a sci-fi novel from Scottish Gaelic to Irish found that this method was 31% faster than translating from scratch (see reference at the end: Post-editing Effort of a Novel With Statistical and Neural Machine Translation). And, when working on post-editing, translators’ productivity increased by 36% when compared to translating from scratch, generating an extra 182 translated words an hour.

With artificial intelligence playing an increasingly central role in all our lives, embracing it as a translation tool is essential to moving the industry forwards. Machine translation has come a long way from where it first began and is set to aid literary translation through post-editing techniques – doing the tiresome legwork so that human translators can add the finishing touches. Not only does it reduce the burden on translators, but NMT also opens up multiple windows of opportunity for languages, from translating texts that have never been translated before, to providing an aid to language learning. Through our collaboration with NMT we can utilise it as a learning tool which is set to pave the way to making both literature and languages more accessible to all.

References:

Brownlee, J. 2017. A Gentle Introduction to Neural Machine Translation. [Accessed 9th July 2020].

Constantine, P. 2019. Google Translate Gets Voltaire: Literary Translation and the Age of Artificial Intelligence. Contemporary French and Francophone Studies. 23(4), pp. 471-479.

Goldhammer, A. 2016. The Perils of Machine Translation. The Wire. [Accessed 14th July 2020].

Google Brain Team. 2016. A Neural Network for Machine Translation, at Production Scale. [Accessed 9th July 2020].

Gu, J., Wang, Y., Chu, K., and Li, V. O. K. 2019. Improved Zero-shot Neural Machine Translation via Ignoring Spurious Correlations. arXiv. [Accessed 10th July 2020].

Iqram, S. 2020. Now you can transcribe speech with Google Translate. [Accessed 9th July 2020].

Jones, B., Andreas, J., Bauer, D., Hermann, K. M., and Knight, K. 2012. Semantics-Based Machine Translation with Hyperedge Replacement Grammars. Anthology. 12(1083), pp. 1359-1376.

Kravariti, A. 2018. Machine Translation: NMT translates literature with 25% flawless rate. Translate Plus. [Accessed 14th July 2020].

Matusov, E. 2019. The Challenges of Using Neural Machine Translation for Literature. European Association for Machine Translation: Dublin, Ireland.

Maučec, M. S., and Donaj, G. 2019. Machine Translation and the Evaluation of Its Quality. Recent Trends in Computational Intelligence. Intech Open.

Shofner, K. 2017. Statistical vs. Neural Machine Translation. ULG’s Language Solutions Blog. [Accessed 10th July 2020].

Systran. 2020. What is Machine Translation? Rule Based Translation vs. Statistical Machine Translation. [Accessed 9th July 2020].

Toral, A., Wieling, M., and Way, A. 2018. Post-editing Effort of a Novel with Statistical and Neural Machine Translation. Frontiers in Digital Humanities. 5(9).

Turovsky, B. 2016. Ten years of Google Translate. [Accessed 9th July 2020].

Wong, S. 2016. Google Translate AI invents its own language to translate with. New Scientist. [Accessed 11th July].

Yamada, M. 2019. The impact of Google Neural Machine Translation on Post-editing by student translators. The Journal of Specialised Translation. 31, pp. 87-95.

Zameo, S. 2019. Neural Machine Translation: tips and advantages for your digital translations. Text Master Go Global. [Accessed 14th July 2020].