Artificial Intelligence

Master or Minion?

On the insurmountable distinctions between humans and machines – an essay.

By Peter Glaser

In 1997, British cyberneticist Kevin Warwick opened his book “March of the Machines” with a dark vision of the future. By the middle of the 21st century, Warwick predicted, a network of artificial intelligence (AI) and superior robots would subjugate mankind, leaving humans to serve their machine masters merely as the element of chaos in the system.

Will machines at first feel ashamed of having been created by human beings, much as humans were at first dismayed to learn that they descended from apes? In the 1980s, American AI pioneer Edward Feigenbaum envisioned books communicating with one another in the libraries of tomorrow, autonomously propagating the knowledge they contained. “Maybe,” his colleague Marvin Minsky commented, “they’ll keep us as pets.” In 1956, Minsky had helped plan a workshop at Dartmouth College in New Hampshire, which is credited with introducing the term “artificial intelligence.”

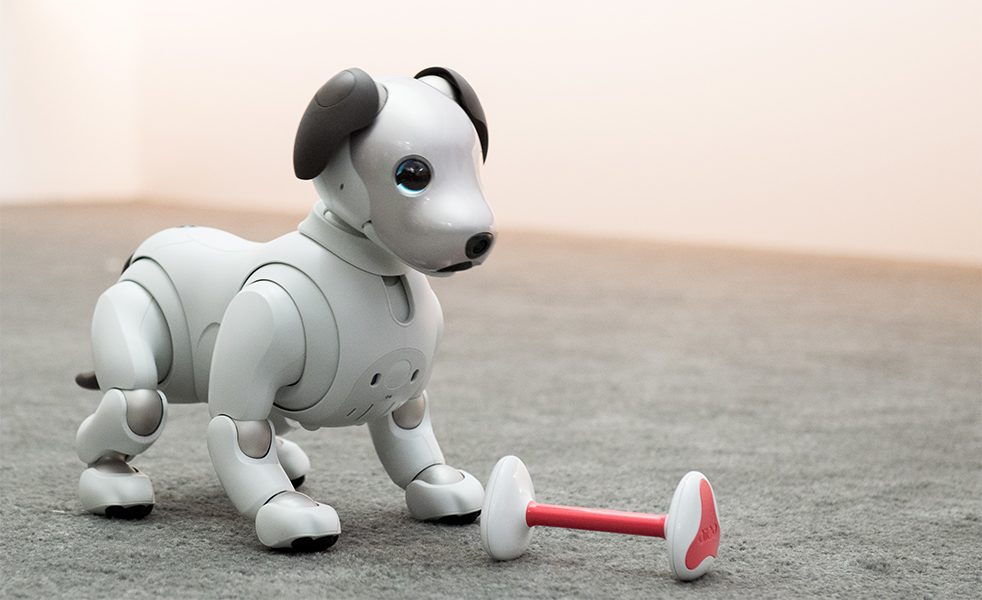

Machines serve as our pets today. Could we be theirs one day?

Photo (detail): © picture alliance / dpa Themendienst / Andrea Warnecke

Speculation about intelligence spreading to computers caused a sensation. Soon, experts promised, electronic brains would solve all our problems. For the most part, reality has not lived up to the hype. Some predictions have come true, though often decades later and only in very limited domains, such as chess and pattern recognition. Recent technological advances have breathed new life into these scenarios. Innovative data storage technologies, ever more powerful supercomputers, novel database concepts for processing huge amounts of data, millions in investment by internet corporations, and states racing for global dominance through “algorithmic advantages” have lent new credence to old fears about artificial intelligence.

In May 2014, four leading scientists, Nobel Prize-winning physicist Frank Wilczek, cosmologist Max Tegmark, computer scientist Stuart Russell and the most famous physicist in the world, Stephen Hawking, published an appeal in the British newspaper The Independent. They cautioned against the complacency of writing off intelligent machines as mere science fiction: “Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.”

Humanity on the Brink of Destruction?

It is striking that AI research is dominated by men, whose grandiose desire to create life may be driven by a reverse form of penis envy we’ll call birth envy. It is an untameable desire to generate a computerized creation that not only equals but surpasses human beings, a rival to the creature that has roamed the earth in ever more sophisticated manifestations since life began some four billion years ago. It is an infatuation with degrading mankind to an intermediate step on the evolutionary ladder, somewhere between the apes and the latest technological crown of creation.

This vision is called “strong” or “true” AI, and is based on the assumption that every function of human consciousness can be computerized, which in turn implies that the human brain works like a computer. All the impassioned warnings about machines running amok come together in the singularity. This is the moment a machine develops the ability to evolve autonomously, triggering runaway advances in performance. The sentinels predicting this rise of artificial superintelligence caution that these hyper-machines will develop their own sense of self once the process has begun, ultimately becoming intelligent, sentient beings.

Concern that wilful objects could destroy humanity is as old as humanity itself. It involves the fear, but also the hope, that inanimate objects might come alive, perhaps with a little magical nudge. The ancient Egyptians placed funerary figurines called shabti, the ‘answerers’, in tombs to do physical labour in the afterlife in place of the deceased. Historically, they represent the first idea of a computer: a minion charged with providing answers and following commands. The formulaic instructions inscribed on the tiny statues bear a striking resemblance to the algorithmic sequence of a modern computer program:

Hail, magical doll!

Should the guardians of the afterlife

Call upon me to work…

You shall take my place,

Whether it be to

Plough the fields,

Fill the channels with water,

Or carry the sand…

The figure’s response is inscribed at the end:

I am here and obey your commands.

Providing answers in the ancient world: Egyptian shabtis, minions charged with working for the dead in the afterlife.

Photo (detail): © picture alliance / akg / Bildarchiv Steffens

Today we would call this “dialogue-oriented user prompting”, and we would dismiss as superstition the belief that a magical incantation could bring a clay figurine to life. Yet this superstition has found its way into modern life. The believers in strong AI are convinced that someday, somehow, a sentient consciousness will emerge in a computer. They hypothesise that thinking can be reduced to the processing of information independent of substance and substratum. A brain, they argue, is not necessary; the human spirit can just as easily be uploaded into a computer. Marvin Minsky, who passed away in January 2016, saw AI as an attempt to cheat death.

The Illusion of the Mechanical Self

Working at the Massachusetts Institute of Technology in 1965, computer scientist Joseph Weizenbaum created ELIZA, a natural language processing program that could simulate conversation. He programmed ELIZA to act like a psychotherapist talking to a patient. A person might type, “My mother is peculiar,” and the computer would respond, “How long has your mother been peculiar?” So had the machine come to life? What is it that speaks to us here, making us feel that a sentient being, similar enough to a human being to be interchangeable, could easily develop inside a computer?

Before ELIZA, machines had only issued impersonal alerts: “oil pressure low”, “system failure”. Weizenbaum was dismayed to see how quickly the people ‘talking’ to ELIZA developed an emotional attachment to the algorithmically camouflaged machine. His secretary, after using the program for only a short time, asked him to leave the room so she could share more intimate personal details. But a machine programmed by a person to say ‘I’ and ‘me’ cannot be understood to possess an actual self.

Seeing the brain as a computer does not correspond to what we actually know about the brain, human intelligence, or the individual self. It is a modern metaphor. In the earliest creation stories, human beings were made of clay into which a deity breathed the breath of life. A hydraulic model later gained popularity: the idea that the flow of humours in the body was responsible for physical and intellectual functions. The 16th century saw the rise of automata made of springs and cogs, which eventually inspired leading thinkers such as French philosopher René Descartes to postulate that human beings were complex machines. In the mid-19th century, German physicist Hermann von Helmholtz compared the human brain to a telegraph machine. Mathematician John von Neumann declared the human nervous system digital and drew new parallels between components of the computing machines of his time and components of the brain. But no one has ever found a database in the brain even slightly similar in function to the memory of a computer.

Very few artificial intelligence researchers are worried about the emergence of a power-hungry superintelligence. “The AI community as a whole is a long way away from building anything that could be a concern to the general public,” Dileep George, co-founder of Vicarious, an AI company, says reassuringly. “As researchers, we have an obligation to educate the public about the difference between Hollywood and reality.”

A machine with civil rights: humanoid Sophia can hold a conversation and show emotion, and she was the first robot to be granted citizenship. Saudi Arabia recognized her as a legal person at the end of 2017.

Photo (detail): © picture alliance / Niu Bo / Imaginechina / dpa

Powered by 50 million dollars in start-up funding from investors like Mark Zuckerberg and Jeff Bezos, Vicarious is working on an algorithm that emulates the cognitive system of the human brain, a very ambitious goal. The largest artificial neural networks operating in computers today have around one billion connections, a thousand times more than their predecessors of just a few years ago. But this is practically insignificant compared to the human brain. It is roughly equivalent to just one cubic millimetre of brain tissue, less than a voxel, the three-dimensional pixel used in computed tomography.

The complexity of the world is the main stumbling block in AI. A newborn emerges fully equipped with a system honed by evolution to deal with this complexity: the senses, a handful of reflexes essential to its survival and, perhaps most important of all, exceptionally efficient learning mechanisms. These allow a human infant to change rapidly in response to stimuli so as to interact better with the world around it, even though that world is not the same one that confronted its distant forebears.

Computers can’t even count to two; they only know ones and zeros, and have to muddle through using a combination of stupidity and speed, maybe a few rules of thumb we would call heuristics, and a whole mess of advanced mathematics (the key words here are neural networks). To understand even the basics of how the brain gives rise to the human intellect, it may not be enough to know the current state of all 86 billion neurons and their 100 trillion synapses, the varying intensity with which they are linked, and the state of more than 1,000 proteins that exist at each and every synapse. We may also need to understand how the activity in the brain at any given moment contributes to the integrity of the entire system.

Even then, we would still be faced with the uniqueness of every brain derived from the unique life and experience of every individual human being on earth.