Beyond Bias

About the project

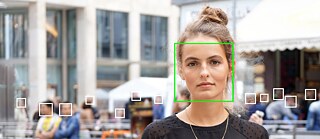

AI systems are mirrors of their training data. If we don’t act now, the generative AI models that will shape tomorrow’s creative tools will continue to perpetuate the biases, limitations, and inequities embedded in today’s digital representations.

In response, the Goethe-Institut, in collaboration with Gooey.AI, is proud to announce a multifaceted initiative that imagines a future in which generative AI embraces and reflects the rich diversity of human experience. By building culturally representative image datasets and fine-tuning AI models on them, we aim to confront bias head-on and develop tools that are both culturally sensitive and technically innovative.

Through participatory research, strategic partnerships, and hands-on creation, this project champions transparency, inclusivity, and community-driven solutions. It brings together voices from the creative, legal, archival, and research domains to reshape the trajectory of AI development. Together, we will design accessible, culturally nuanced datasets and fine-tuned AI models that empower anyone to generate new, representative visuals. This is a step toward an equitable and inclusive digital future for creators everywhere.

Our Goals

- Engage Digitally Under-represented Communities: Convene a diverse network of cultural, academic, and legal practitioners to address how communities such as gender minorities and artisanal communities can be fairly represented in generative AI.

- Forge Strategic Partnerships: Collaborate with German and Indian institutions to amplify our global impact.

- Create Public Tools: Develop replicable AI workflows that creators everywhere can use to train and fine-tune AI models tailored to their own needs.

- Empower Public Use: Make these custom AI models publicly available on Gooey.AI, Hugging Face, and elsewhere, so anyone can make art in styles that existing models could not produce.

- Expand Image Sets: Help make image sets from under-represented communities available to frontier AI image-generation models (e.g., OpenAI’s DALL·E, Stable Diffusion, FLUX), so that the next generation of GenAI tools represents more cultures fairly.