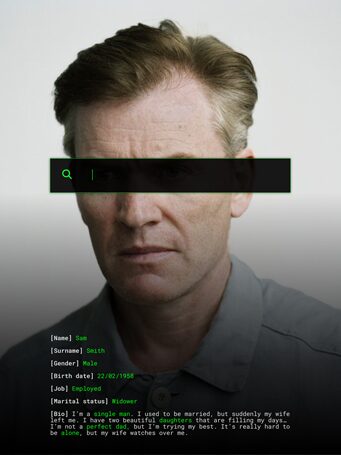

How is bias coded into our technology? How can we infiltrate the opaque mechanisms upon which technological systems are built and counter the perpetuation of bias? The Open Call, the festival Revisions. Decoding Technological Bias, and the film series Filmic Explorations are part of the project IMAGE + BIAS, which critically engages with cultural realities that are increasingly determined by imperceptible technologies.

The festival Revisions. Decoding Technological Bias took place from June 10 through 18, 2021, and brought together a network of luminaries to share new perspectives and rewrite visions that advocate for justice and reclaim power.

From March 1 through May 23, 2021, we invited artists, designers, and the general public to submit creative representations on the subject of bias and technology’s growing ability to alter people’s visual perception of reality.

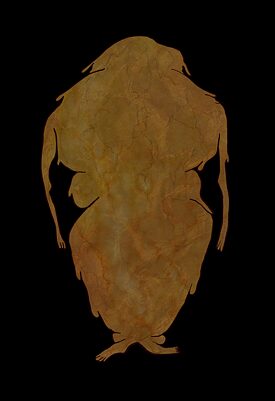

An international jury composed of artists, curators, and researchers had the difficult task of selecting the ten best works* from over 150 submissions.

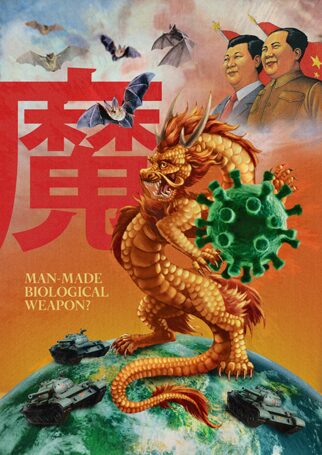

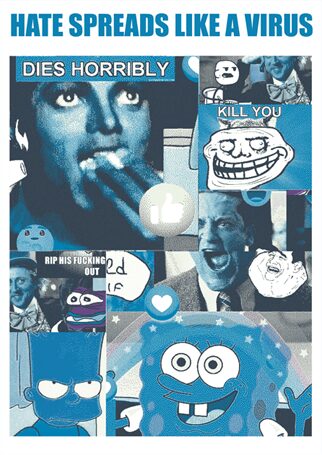

* These AR art pieces contain sensitive or violent content that some people may find offensive or disturbing.